Advent of 2021, Day 5 – Setting up Spark Cluster

Want to share your content on R-bloggers? click here if you have a blog, or here if you don't.

Series of Apache Spark posts:

- Dec 01: What is Apache Spark

- Dec 02: Installing Apache Spark

- Dec 03: Getting around CLI and WEB UI in Apache Spark

- Dec 04: Spark Architecture – Local and cluster mode

We have explored the Spark architecture and looked into the differences between local and cluster mode.

If you navigate to your local installation of Apache Spark (/usr/local/Cellar/apache-spark/3.2.0/bin), you can run Spark in Scala, Python or R with the following commands.

For Scala:

spark-shell --master local

For Python:

pyspark --master local

For R:

sparkR --master local

In each case the Web UI (http://localhost:4040) shows the application started by that shell, so its name changes with the language you use.
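The value given to --master decides where the shell runs. To make the common forms concrete, here is a hypothetical helper, describe_master (not part of Spark), that classifies the master URLs listed in the Spark documentation: local (one thread), local[N], local[*], spark://HOST:PORT for a standalone master, yarn, k8s:// and mesos://. It is only a sketch and deliberately skips rarer forms such as local[N,F] or multi-master standalone URLs.

```python
import re


def describe_master(master: str) -> str:
    """Classify the common --master values (illustrative helper, not a Spark API)."""
    if master == "local":
        master = "local[1]"  # plain "local" means a single worker thread
    m = re.fullmatch(r"local\[(\*|\d+)\]", master)
    if m:
        if m.group(1) == "*":
            return "local mode, one worker thread per logical core"
        n = int(m.group(1))
        return f"local mode, {n} worker thread" + ("" if n == 1 else "s")
    # Standalone master: exactly one host:port pair (multi-master URLs are not handled)
    m = re.fullmatch(r"spark://([^:/,]+):(\d+)", master)
    if m:
        return f"standalone cluster, master at {m.group(1)}:{m.group(2)}"
    if master == "yarn":
        return "Hadoop YARN cluster"
    if master.startswith("k8s://"):
        return "Kubernetes cluster"
    if master.startswith("mesos://"):
        return "Mesos cluster"
    raise ValueError(f"unrecognised master URL: {master!r}")
```

For example, describe_master("local") describes the single-threaded mode used by the three commands above, while a spark:// URL points at a standalone master like the one set up below.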

Spark can run either on its own or on top of several existing cluster managers, which gives several deployment options. If you decide to use Hadoop and YARN, you usually need to install everything on every node: Java (the JDK), Hadoop, and all the required configuration. This setup is preferred when you run several nodes. A good example and explanation is available here. You will also install HDFS, which comes with Hadoop.

Spark Standalone Mode

Besides running on Hadoop YARN, Kubernetes or Mesos, Spark ships with its own standalone cluster manager, which is the simplest way to deploy a Spark application on a private cluster.

In local mode, the Web UI is available at http://localhost:4040; in standalone mode, the master's Web UI is available at http://localhost:8080.
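Both addresses are easy to probe from a script. The sketch below assumes the default ports: each driver on a host takes the next free port starting at 4040 (4041, 4042, and so on when several applications run at once), and the standalone master's UI listens on 8080. The function names are hypothetical, and proxies are bypassed on the assumption that the UIs run locally.

```python
from __future__ import annotations

import urllib.error
import urllib.request

MASTER_UI = "http://localhost:8080"  # standalone master's Web UI (default port)

# Talk to local UIs directly, ignoring any http_proxy settings in the environment
_opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))


def application_ui_candidates(host: str = "localhost", first_port: int = 4040,
                              count: int = 3) -> list[str]:
    """Drivers on one host bind successive ports from 4040 up (4041, 4042, ...)."""
    return [f"http://{host}:{port}" for port in range(first_port, first_port + count)]


def is_reachable(url: str, timeout: float = 2.0) -> bool:
    """True if an HTTP server answers at url within timeout seconds."""
    try:
        with _opener.open(url, timeout=timeout):
            return True
    except urllib.error.HTTPError:
        return True  # the server answered with an error status, so it is up
    except OSError:
        return False  # refused, timed out, DNS failure, ... (URLError is an OSError)
```

For example, [u for u in application_ui_candidates() if is_reachable(u)] lists the application UIs that are currently up, and is_reachable(MASTER_UI) tells you whether the master is running.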

Installing Spark in standalone mode is simple: you place a compiled version of Spark on each node of the cluster.

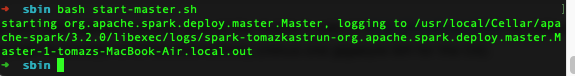

To start a cluster manually, navigate to the folder /usr/local/Cellar/apache-spark/3.2.0/libexec/sbin and run:

start-master.sh

Once it has started, open the master's Web UI at http://localhost:8080. The page shows the master URL (spark://HOST:PORT) that workers and applications use to connect.

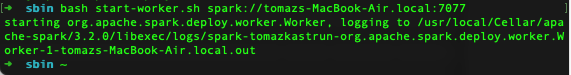
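The master URL on that page (spark://tomazs-MacBook-Air.local:7077 on the author's machine) is built from the host name and port the master binds to. A minimal sketch of where it comes from, assuming the defaults of the sbin scripts: the host is SPARK_MASTER_HOST or, if unset, the machine's fully qualified host name, and the ports are SPARK_MASTER_PORT (7077) and SPARK_MASTER_WEBUI_PORT (8080). The function names are hypothetical, and socket.getfqdn() only approximates what the scripts use, so treat the result as a guess and trust the URL shown on the Web UI.

```python
import os
import socket


def default_master_url() -> str:
    """Approximate the spark:// URL that start-master.sh advertises.

    Assumption: host = SPARK_MASTER_HOST, else this machine's fully qualified
    host name; port = SPARK_MASTER_PORT, else 7077.
    """
    host = os.environ.get("SPARK_MASTER_HOST") or socket.getfqdn()
    port = int(os.environ.get("SPARK_MASTER_PORT") or 7077)
    return f"spark://{host}:{port}"


def default_master_ui_url(host: str = "localhost") -> str:
    """The master's Web UI listens on SPARK_MASTER_WEBUI_PORT, 8080 unless overridden."""
    port = int(os.environ.get("SPARK_MASTER_WEBUI_PORT") or 8080)
    return f"http://{host}:{port}"
```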

We can now add a worker by running this command (replace tomazs-MacBook-Air.local with the host name shown on your master's Web UI):

start-worker.sh spark://tomazs-MacBook-Air.local:7077

The CLI returns a message saying that the worker is starting and where its log file is written.

Refresh the Spark master's Web UI and check that the worker node is listed:
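Starting a worker and checking that it registered can also be scripted. In this sketch, SPARK_HOME is assumed to be the Homebrew libexec folder used above, and the helper names are hypothetical. start-worker.sh expects the master URL as its first argument, followed by options such as -c (cores) and -m (memory) that limit what the worker offers. The check assumes that the standalone master also serves its status as JSON under /json/ on the Web UI port, with a workers list whose entries carry host, cores, memory and state fields.

```python
import json
import os
import subprocess
import urllib.request
from typing import Any, Dict, List, Optional

# Assumption: Homebrew layout from this post; set SPARK_HOME if yours differs.
SPARK_HOME = os.environ.get("SPARK_HOME", "/usr/local/Cellar/apache-spark/3.2.0/libexec")


def start_worker_command(master_url: str, cores: Optional[int] = None,
                         memory: Optional[str] = None,
                         spark_home: str = SPARK_HOME) -> List[str]:
    """argv for sbin/start-worker.sh: the master URL comes first, options after it."""
    cmd = [os.path.join(spark_home, "sbin", "start-worker.sh"), master_url]
    if cores is not None:
        cmd += ["-c", str(cores)]  # cores this worker offers to applications
    if memory is not None:
        cmd += ["-m", memory]      # memory this worker offers, e.g. "2g"
    return cmd


def start_worker(master_url: str, dry_run: bool = True, **options: Any) -> List[str]:
    """Run start-worker.sh, or only return the command when dry_run is True."""
    cmd = start_worker_command(master_url, **options)
    if not dry_run:
        subprocess.run(cmd, check=True)
    return cmd


def fetch_master_state(master_ui: str = "http://localhost:8080",
                       timeout: float = 5.0) -> Dict[str, Any]:
    """Assumption: the standalone master serves its status as JSON under /json/."""
    with urllib.request.urlopen(master_ui.rstrip("/") + "/json/", timeout=timeout) as resp:
        return json.load(resp)


def alive_workers(state: Dict[str, Any]) -> List[tuple]:
    """(host, cores, memory) for every worker the master reports as ALIVE."""
    return [
        (w.get("host"), w.get("cores"), w.get("memory"))
        for w in state.get("workers", [])
        if w.get("state") == "ALIVE"
    ]
```

For example, start_worker("spark://tomazs-MacBook-Air.local:7077", dry_run=False, cores=2, memory="2g") starts a worker with a capped share of the laptop, and alive_workers(fetch_master_state()) should then list it.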

Connecting and running an application

To run an application on the Spark cluster, pass the master URL spark://tomazs-MacBook-Air.local:7077 to the SparkContext constructor.
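In PySpark this looks roughly like the sketch below. cluster_conf is a hypothetical helper that gathers standard Spark properties: spark.master and spark.app.name, plus spark.executor.memory and spark.cores.max, the properties behind spark-submit's --executor-memory and --total-executor-cores options. create_context imports pyspark lazily, so the helper can be tried without a Spark installation.

```python
from typing import Dict, Optional

# Example value from this post; use the URL shown on your own master's Web UI.
MASTER_URL = "spark://tomazs-MacBook-Air.local:7077"


def cluster_conf(master_url: str, app_name: str, executor_memory: str = "1g",
                 max_cores: Optional[int] = None) -> Dict[str, str]:
    """Spark properties matching --master, --name, --executor-memory, --total-executor-cores."""
    conf = {
        "spark.master": master_url,
        "spark.app.name": app_name,
        "spark.executor.memory": executor_memory,
    }
    if max_cores is not None:
        conf["spark.cores.max"] = str(max_cores)  # total cores across the whole cluster
    return conf


def create_context(master_url: str = MASTER_URL, app_name: str = "advent-day5", **kwargs):
    """Connect to the cluster. Needs pyspark, imported here so cluster_conf works without it."""
    from pyspark import SparkConf, SparkContext

    spark_conf = SparkConf().setAll(list(cluster_conf(master_url, app_name, **kwargs).items()))
    return SparkContext(conf=spark_conf)
```

For example, sc = create_context(max_cores=2) connects to the cluster; call sc.stop() when you are done so the cores are released for other applications.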

Or simply run the following command in the folder /usr/local/Cellar/apache-spark/3.2.0/bin to open an interactive shell against the cluster:

spark-shell --master spark://tomazs-MacBook-Air.local:7077

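With the spark-submit command, we can run an application on the Spark Standalone cluster. The --class option is only needed for Java or Scala applications, so it is left out for a Python script. Standalone clusters do not support cluster deploy mode for Python applications, so the script runs in the default client deploy mode. Set --executor-memory and --total-executor-cores to what your workers actually offer; if no worker has enough free memory, the application waits for resources. Navigate to /usr/local/Cellar/apache-spark/3.2.0/bin and execute:

spark-submit \
  --master spark://tomazs-MacBook-Air.local:7077 \
  --executor-memory 20G \
  --total-executor-cores 100 \
  python1hello.py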
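If you submit jobs from scripts, it helps to build the argument list in one place. The hypothetical spark_submit_command below assembles the same kind of spark-submit command and encodes two rules: spark-submit refuses cluster deploy mode for Python applications on a standalone master, and --class only makes sense for JVM applications (rejecting it for a .py file is this sketch's own choice, not Spark's behaviour).

```python
import subprocess
from typing import List, Optional


def spark_submit_command(app: str, master: str, deploy_mode: str = "client",
                         executor_memory: Optional[str] = None,
                         total_executor_cores: Optional[int] = None,
                         main_class: Optional[str] = None) -> List[str]:
    """Build a spark-submit argv; all flags come before the application file."""
    is_python = app.endswith(".py")
    if is_python and master.startswith("spark://") and deploy_mode == "cluster":
        # spark-submit rejects this combination for standalone masters
        raise ValueError("cluster deploy mode is not supported for Python apps on standalone clusters")
    if is_python and main_class is not None:
        # this sketch's own rule: --class names a JVM entry point, meaningless for a .py file
        raise ValueError("--class only applies to Java/Scala applications")
    cmd = ["spark-submit", "--master", master, "--deploy-mode", deploy_mode]
    if main_class is not None:
        cmd += ["--class", main_class]
    if executor_memory is not None:
        cmd += ["--executor-memory", executor_memory]
    if total_executor_cores is not None:
        cmd += ["--total-executor-cores", str(total_executor_cores)]
    cmd.append(app)  # anything after the app file would be passed to the app itself
    return cmd


def submit(cmd: List[str]) -> None:
    """Run the command; assumes spark-submit is on PATH."""
    subprocess.run(cmd, check=True)
```

Building the list instead of a single shell string also avoids the line-continuation pitfalls of long commands: each flag and value is a separate element, so no backslashes or quoting are needed.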

Here, python1hello.py is a Python script as simple as:

x = 1
if x == 1:
    print("Hello, x = 1.")
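The script above never touches Spark, so it only proves that submission works. Below is a sketch that actually spreads work over the executors, loosely modelled on the Monte Carlo Pi estimate in Spark's bundled examples; the function names are hypothetical. estimate_pi_local runs the same sampling in plain Python so the logic can be checked without a cluster, while estimate_pi_spark needs pyspark and takes the master URL from spark-submit.

```python
import random
from operator import add


def sample_inside(_: int) -> int:
    """Throw one random point into the square [-1, 1] x [-1, 1]; 1 if it lands in the unit circle."""
    x = random.random() * 2 - 1
    y = random.random() * 2 - 1
    return 1 if x * x + y * y <= 1 else 0


def estimate_pi_local(n: int) -> float:
    """Same Monte Carlo estimate in plain Python: the circle covers pi/4 of the square."""
    hits = sum(sample_inside(i) for i in range(n))
    return 4.0 * hits / n


def estimate_pi_spark(n: int, partitions: int = 2) -> float:
    """Distributed version; run it through spark-submit, which supplies the master URL."""
    from pyspark.sql import SparkSession  # lazy import: the local version needs no Spark

    spark = SparkSession.builder.appName("PiSketch").getOrCreate()
    try:
        hits = (
            spark.sparkContext.parallelize(range(n), partitions)
            .map(sample_inside)  # each executor samples its share of the points
            .reduce(add)         # sum the hits across partitions
        )
    finally:
        spark.stop()  # fine for a submitted script; avoid this inside an interactive shell
    return 4.0 * hits / n
```

To try it on the cluster, add a last line such as print(estimate_pi_spark(1_000_000, partitions=4)) and submit the file with the spark-submit command in place of python1hello.py.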

Tomorrow we will look into the IDE and start working with the code.

The complete set of code, documents, notebooks, and all of the materials will be available at the GitHub repository: https://github.com/tomaztk/Spark-for-data-engineers

Happy Spark Advent of 2021!
