Advent of 2021, Day 4 – Spark Architecture – Local and cluster mode

Series of Apache Spark posts:

- Dec 01: What is Apache Spark

- Dec 02: Installing Apache Spark

- Dec 03: Getting around CLI and WEB UI in Apache Spark

Before diving into IDEs, let’s look at the architecture options available in Apache Spark.

Architecture

Finding the best way to write Spark code depends on the language flavour. As we have mentioned, Spark runs on Windows as well as on macOS and Linux (both UNIX-like systems), and you will need Java installed to run it. Spark runs on Java 8/11, Scala 2.12, Python 2.7+/3.4+ and R 3.1+. The language flavour can also determine which IDE you will use.

Spark comes with several sample scripts, and you can run them by heading to the CLI and calling, for example, the following commands for R or Python:

R:

sparkR --master local[2]
spark-submit examples/src/main/r/dataframe.R

And for Python:

pyspark --master local[2]
spark-submit examples/src/main/python/pi.py 10

Each time, however, the cluster needs to be initialized and running before the commands can be executed.

Running in local mode and running in a cluster

Local mode

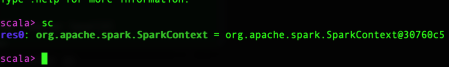

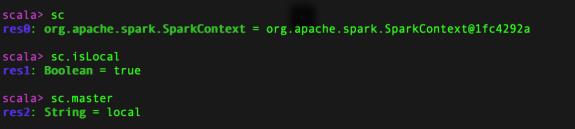

Spark's local mode is available immediately upon starting the shell. Simply run sc and you will get the context information.

In addition, you can also run:

sc.isLocal
sc.master

These return information about the context and the execution mode.

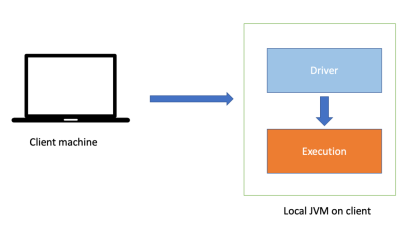

Local mode is the default mode and does not require any resource manager. When you run the spark-shell command, it is already up and running. Local mode is also good for testing and quick-setup scenarios, and by default the number of partitions equals the number of CPU cores on the local machine. You can start in local mode with any of the following commands:

spark-shell
spark-shell --master local
spark-shell --master local[*]
spark-shell --master local[3]

By default, spark-shell executes in local mode. With the master argument you can set the local attribute to the number of threads you want the Spark application to run with; remember, Spark is optimised for parallel computation. With plain local, Spark runs with a single thread. Passing a number in brackets, such as local[3], gives that many threads, and local[*] uses as many threads as there are logical cores on the machine.
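To make the thread semantics concrete, here is a minimal sketch in plain Python (no Spark required). The helper name local_threads is hypothetical and this is not Spark's own parser; it only mirrors the three local master forms described above.

```python
import os
import re
from typing import Optional


def local_threads(master: str) -> Optional[int]:
    """Return the worker-thread count implied by a local master URL.

    Hypothetical helper for illustration only. Returns None when the
    URL is not one of the three forms handled here:
    local, local[N], local[*].
    """
    if master == "local":
        return 1  # plain "local" means a single worker thread
    match = re.fullmatch(r"local\[(\*|\d+)\]", master)
    if match is None:
        return None  # not a local master URL we recognise
    value = match.group(1)
    if value == "*":
        # local[*]: one thread per logical core on this machine
        return os.cpu_count() or 1
    return int(value)


if __name__ == "__main__":
    for url in ["local", "local[3]", "local[*]", "yarn"]:
        print(url, "->", local_threads(url))
```

The value printed for local[*] depends on the machine the script runs on.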

Cluster Mode

When it comes to cluster mode, there are a few components to consider: the driver program, the cluster manager, and the executors.

Running Spark in cluster mode means running a Spark application as a set of processes on a cluster, coordinated by the driver. The driver program uses the SparkContext object to connect to different types of cluster managers. These managers can be:

– Standalone cluster manager (Spark’s own manager that is deployed on private cluster)

– Apache Mesos cluster manager

– Hadoop YARN cluster manager

– Kubernetes cluster manager.
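The master URL given to Spark is what selects among these managers. As a rough sketch in plain Python (no Spark required), the hypothetical helper cluster_manager_for below maps a master URL to the manager it points at, using the URL forms from Spark's documentation. The host names and ports in the usage lines are placeholders, not real endpoints.

```python
def cluster_manager_for(master: str) -> str:
    """Name the cluster manager a Spark master URL refers to.

    Hypothetical helper for illustration; it only checks the URL form
    and does not contact any cluster.
    """
    if master == "local" or master.startswith("local["):
        return "local mode (no cluster manager)"
    if master.startswith("spark://"):
        return "Standalone"
    if master.startswith("mesos://"):
        return "Apache Mesos"
    if master == "yarn":
        return "Hadoop YARN"
    if master.startswith("k8s://"):
        return "Kubernetes"
    raise ValueError(f"Unrecognised master URL: {master!r}")


if __name__ == "__main__":
    # Placeholder hosts and ports, for illustration only
    examples = [
        "local[2]",
        "spark://example-host:7077",
        "mesos://example-host:5050",
        "yarn",
        "k8s://https://example-host:6443",
    ]
    for url in examples:
        print(url, "->", cluster_manager_for(url))
```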

The cluster manager is responsible for allocating resources across Spark applications. This architecture has several advantages. Each application is isolated from the others because it gets its own executor processes: each driver schedules its own tasks, and tasks from different applications run in different JVMs. The downside is that data cannot be shared across different Spark applications without being written to a storage system outside of the application.

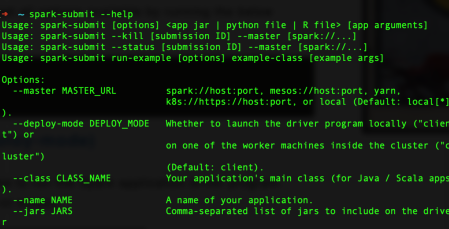

To run Spark in cluster mode, we need to use the spark-submit command rather than spark-shell. The general form is:

spark-submit \
  --class <main-class> \
  --master <master-url> \
  --deploy-mode <deploy-mode> \
  --conf <key>=<value> \
  ... # other options
  <application-jar> \
  [application-arguments]

The most commonly used options are:

– --class: The entry point for your application

– --master: The master URL for the cluster

– --deploy-mode: Whether to deploy your driver on the worker nodes (cluster) or locally as an external client (client) (default: client)
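As an illustration of how these options fit together, the following sketch assembles a spark-submit argument list in plain Python. The function build_spark_submit and its parameter names are hypothetical and not part of Spark. The flags themselves are the ones listed above, and the example script path is the pi.py sample used earlier in this post.

```python
import shlex
from typing import Dict, List, Optional, Sequence


def build_spark_submit(
    application: str,
    master: str,
    deploy_mode: str = "client",
    main_class: Optional[str] = None,
    conf: Optional[Dict[str, str]] = None,
    app_args: Sequence[str] = (),
) -> List[str]:
    """Assemble a spark-submit command as a list of arguments.

    Hypothetical helper for illustration; it builds the command
    but does not run it.
    """
    if deploy_mode not in ("client", "cluster"):
        raise ValueError("deploy_mode must be 'client' or 'cluster'")
    cmd = ["spark-submit"]
    if main_class:
        # --class is only needed for JVM applications (jar files)
        cmd += ["--class", main_class]
    cmd += ["--master", master, "--deploy-mode", deploy_mode]
    for key, value in (conf or {}).items():
        cmd += ["--conf", f"{key}={value}"]
    cmd.append(application)  # application jar or script
    cmd.extend(app_args)     # arguments passed to the application itself
    return cmd


if __name__ == "__main__":
    cmd = build_spark_submit(
        application="examples/src/main/python/pi.py",
        master="local[2]",
        app_args=["10"],
    )
    # shlex.join (Python 3.8+) quotes arguments for safe pasting into a shell
    print(shlex.join(cmd))
```

Returning a list rather than a single string keeps the arguments unambiguous; the list could be handed to subprocess.run on a machine where spark-submit is on the PATH.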

A simple spark-submit --help will give you additional information.

Tomorrow we will look into spark-submit and cluster installation.

The complete set of code, documents, notebooks, and all of the materials will be available at the GitHub repository: https://github.com/tomaztk/Spark-for-data-engineers

Happy Spark Advent of 2021!
