Want to share your content on R-bloggers? click here if you have a blog, or here if you don't.

Series of Azure Databricks posts:

- Dec 01: What is Azure Databricks

- Dec 02: How to get started with Azure Databricks

- Dec 03: Getting to know the workspace and Azure Databricks platform

- Dec 04: Creating your first Azure Databricks cluster

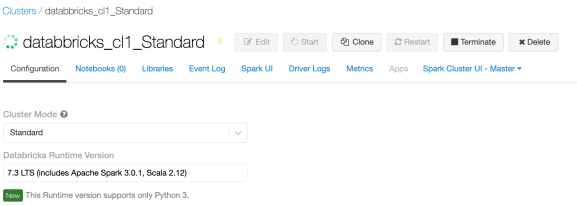

Yesterday we unveiled a couple of concepts about workers, drivers, and how autoscaling works. To explore the services behind the cluster, start up the cluster we created yesterday (if it was automatically terminated or you stopped it manually).

The cluster is starting up (once it has started, the green loading circle becomes full):

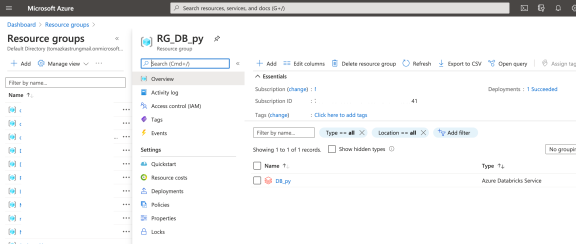

My cluster is a Standard DS3_v2 cluster (4 cores) with Min 2 and Max 8 workers. The same applies to the driver. Once the cluster is up and running, go to the Azure Portal. Look for the resource group that you created at the beginning (Day 2), when we started the Databricks service. I have named my resource group “RG_DB_py” (naming is important! RG: ResourceGroup; DB: Service DataBricks; py: my project name). Search for the correct resource:

Select “Resource Groups” and find your resource group. I have a lot of resource groups, since I try to bundle projects into small groups that are closely related:

Find yours and select it, and you will find the Azure Databricks service that belongs to this resource group.

Databricks creates an additional, automatically generated resource group to hold all the services (storage, VM, network, etc.). It follows this naming convention:

My resource group is RG_DB_py. In this case Azure adds a prefix and a suffix to the resource group name, giving: databricks_rg_DB_py_npkw4cltqrcxe. The prefix is always “databricks_rg” and the suffix is a random 13-character string for uniqueness, in my case npkw4cltqrcxe. Why a separate resource group? The services used to live under the same resource group, but decoupling them into a separate group makes it easier to start and stop services, manage IAM, create pools, and scale. Find your resource group and look at what is inside.
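The naming convention can be checked with a few lines of Python. This is only a sketch built from the single example in this post: the helper name `split_managed_rg_name` is hypothetical, and the pattern (a `databricks_rg` prefix, a middle part, and a 13-character suffix) is inferred from my resource group, not taken from any official specification.

```python
import re

# Pattern inferred from the one example in this post (an assumption,
# not an official Azure rule): fixed prefix, middle part, 13-char suffix.
MANAGED_RG_PATTERN = re.compile(r"^(databricks_rg)_(.+)_([a-z0-9]{13})$")


def split_managed_rg_name(name):
    """Split a managed resource group name into (prefix, middle, suffix).

    Returns None when the name does not match the assumed pattern.
    """
    match = MANAGED_RG_PATTERN.match(name)
    if match is None:
        return None
    return match.groups()


print(split_managed_rg_name("databricks_rg_DB_py_npkw4cltqrcxe"))
# prints ('databricks_rg', 'DB_py', 'npkw4cltqrcxe')
```

A name that does not follow the pattern, such as my own RG_DB_py, simply returns `None`, which makes it easy to tell your own resource groups apart from the generated ones.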

In the detailed list you will find the following resources (in accordance with my Standard DS3_v2 cluster):

- Disk (9x resources)

- Network Interface (3x resources)

- Network Security group (1x resource)

- Public IP address (3x resources)

- Storage account (1x resource)

- Virtual Machine (3x resources)

- Virtual network (1x resource)
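The counts in this list follow a simple pattern: three VMs (one driver and two workers), each with three disks, one network interface, and one public IP address, plus one shared security group, storage account, and virtual network. A small sketch of that arithmetic is below. The function name `expected_resources` is hypothetical, and the assumption that per-VM resources grow linearly with the number of VMs is my own reading of the list, not something Azure documents in this form.

```python
# Per-VM and shared resource counts, inferred from the list above
# (3 VMs -> 9 disks, 3 network interfaces, 3 public IP addresses).
PER_VM = {
    "Disk": 3,
    "Network Interface": 1,
    "Public IP address": 1,
    "Virtual Machine": 1,
}
SHARED = {
    "Network Security group": 1,
    "Storage account": 1,
    "Virtual network": 1,
}


def expected_resources(vm_count):
    """Return the expected resource counts for a given number of VMs."""
    counts = {name: per_vm * vm_count for name, per_vm in PER_VM.items()}
    counts.update(SHARED)  # shared resources do not depend on the VM count
    return counts


# 1 driver + 2 workers, as in the cluster used in this post.
for name, count in sorted(expected_resources(3).items()):
    print(f"{name}: {count}")
```

With `vm_count=3` the output matches the list above, which is a quick way to sanity-check what you see in your own managed resource group.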

Inspect the names of these resources: they are GUID-based, but the same names repeat across different resources, so the resources can easily be grouped together. Drawing the components together gives the full picture:

At a high level, the Azure Databricks service manages the worker nodes and the driver node in a separate resource group that is tied to the same Azure subscription (for easier scalability and management). The platform, or “appliance”, or “managed service”, is deployed as a set of Azure resources, and Databricks manages all other aspects. The additional VNet, security groups, IP addresses, and storage accounts are ready to be used by the end user and are managed through the Azure Databricks Portal (UI). Storage is also replicated (geo-redundant replication) for disaster scenarios and fault tolerance. Even when the cluster is turned off, the data is persisted in storage.

Each cluster node is a virtual machine with blob storage attached to it. The virtual machine runs Ubuntu Linux (16.04 at the time of writing) and has 4 vCPUs and 14 GiB of RAM. The workers use two virtual machines, and an identical virtual machine is reserved for the driver. This is what we set up on Day 2.

Since every VM is the same (for workers and driver), the workers can be scaled up based on vCPUs. Two VMs for workers, with 4 cores each, give a maximum of 8 workers, so each vCPU (core) is considered one worker. The driver machine (also a VM running Ubuntu Linux) is the manager machine that distributes the load among the workers.

Each virtual machine is set up with a public and a private subnet, and all are mapped together in a virtual network (VNet) for secure connectivity and communication of workloads and data results. Each VM also has a dedicated public IP address for communication with other services or Databricks Connect tools (I will talk about this in later posts).

Disks are also bundled in three types, one of each type per VM. These types are:

- Scratch Volume

- Container Root Volume

- Standard Volume

Each type has a specific function, but all are designed for optimised performance and data caching, especially delta caching. Delta caching speeds up data reads by creating copies of remote files in the nodes’ local storage, using a fast intermediate data format. The data is cached automatically whenever a file has to be fetched from a remote location, so repeated and successive reads also perform well.

Delta caching (as part of Spark caching) is supported only for reading Parquet files in DBFS, HDFS, Azure Blob storage, Azure Data Lake Storage Gen1, and Azure Data Lake Storage Gen2. Optimized storage (Spark caching) does not support file types such as CSV, JSON, TXT, ORC, or XML.
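To make the caching idea more concrete, here is a toy read-through cache in plain Python. It is not how Databricks implements delta caching; it only illustrates the behaviour described above: the first read of a Parquet file goes to remote storage and leaves a local copy, later reads are served locally, and other file types are never cached. The class `ReadThroughCache`, the function `remote_fetch`, and the file paths are all hypothetical.

```python
class ReadThroughCache:
    """Toy read-through cache: keeps a local copy of each remote file."""

    def __init__(self, fetch_remote):
        self._fetch_remote = fetch_remote  # function that reads remote storage
        self._local = {}                   # stands in for the node's local disk
        self.remote_reads = 0              # how often remote storage was hit

    def read(self, path):
        # Only Parquet files are cached, mirroring the restriction above.
        if not path.endswith(".parquet"):
            self.remote_reads += 1
            return self._fetch_remote(path)
        if path not in self._local:
            self.remote_reads += 1
            self._local[path] = self._fetch_remote(path)
        return self._local[path]


def remote_fetch(path):
    """Hypothetical stand-in for a read from Blob storage."""
    return f"contents of {path}"


cache = ReadThroughCache(remote_fetch)
cache.read("/data/sales.parquet")  # first read goes to remote storage
cache.read("/data/sales.parquet")  # second read is served from the local copy
cache.read("/data/sales.csv")      # CSV is never cached
cache.read("/data/sales.csv")      # so this goes to remote storage again
print(cache.remote_reads)          # prints 3
```

Four reads result in only three trips to remote storage, and the saving grows with every repeated read of the same Parquet file.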

When a request is pushed from the Databricks Portal (UI), the main driver accepts it and, using Spark jobs, pushes the workload down to each node. Each node either holds shards and copies of the data or gets the data through DBFS from Blob Storage, and then executes the job. After execution, the results from each worker node are gathered and combined by the driver. The driver node then returns the results in a presentable form back to the UI.

The more worker nodes you have, the more “parallel” the execution of a request can be. And the more workers you have available (or in ready mode), the more you can “scale” your workloads.

Tomorrow we will start working our way up to importing and storing data, see how it is stored on blob storage, and explore the different types of storage that Azure Databricks provides.

The complete set of code and notebooks will be available at the GitHub repository.
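The driver and worker flow described above is a classic scatter and gather pattern, and it can be sketched in a few lines of plain Python. This is a simplified stand-in, not Spark: threads play the role of worker nodes, a list of numbers plays the role of the data, and the function names (`split_into_partitions`, `worker_task`, `run_job`) are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_partitions(data, n_partitions):
    """Driver side: split the data into roughly equal partitions."""
    return [data[i::n_partitions] for i in range(n_partitions)]


def worker_task(partition):
    """Worker side: compute a partial result for one partition."""
    return sum(partition)


def run_job(data, n_workers):
    """Driver side: distribute partitions, then gather and combine results."""
    partitions = split_into_partitions(data, n_workers)
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        partial_results = list(pool.map(worker_task, partitions))
    return sum(partial_results)


print(run_job(list(range(1, 101)), n_workers=2))  # prints 5050
```

Changing `n_workers` changes how many partitions are processed side by side, while the combined result stays the same.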

Happy Coding and Stay Healthy!

