Advent of 2020, Day 26 – Connecting Azure Machine Learning Services Workspace and Azure Databricks


Series of Azure Databricks posts:

- Dec 01: What is Azure Databricks

- Dec 02: How to get started with Azure Databricks

- Dec 03: Getting to know the workspace and Azure Databricks platform

- Dec 04: Creating your first Azure Databricks cluster

- Dec 05: Understanding Azure Databricks cluster architecture, workers, drivers and jobs

- Dec 06: Importing and storing data to Azure Databricks

- Dec 07: Starting with Databricks notebooks and loading data to DBFS

- Dec 08: Using Databricks CLI and DBFS CLI for file upload

- Dec 09: Connect to Azure Blob storage using Notebooks in Azure Databricks

- Dec 10: Using Azure Databricks Notebooks with SQL for Data engineering tasks

- Dec 11: Using Azure Databricks Notebooks with R Language for data analytics

- Dec 12: Using Azure Databricks Notebooks with Python Language for data analytics

- Dec 13: Using Python Databricks Koalas with Azure Databricks

- Dec 14: From configuration to execution of Databricks jobs

- Dec 15: Databricks Spark UI, Event Logs, Driver logs and Metrics

- Dec 16: Databricks experiments, models and MLFlow

- Dec 17: End-to-End Machine learning project in Azure Databricks

- Dec 18: Using Azure Data Factory with Azure Databricks

- Dec 19: Using Azure Data Factory with Azure Databricks for merging CSV files

- Dec 20: Orchestrating multiple notebooks with Azure Databricks

- Dec 21: Using Scala with Spark Core API in Azure Databricks

- Dec 22: Using Spark SQL and DataFrames in Azure Databricks

- Dec 23: Using Spark Streaming in Azure Databricks

- Dec 24: Using Spark MLlib for Machine Learning in Azure Databricks

- Dec 25: Using Spark GraphFrames in Azure Databricks

Yesterday we looked at GraphFrames in Azure Databricks and its capabilities for computing on graph data.

Today we will look into Azure Machine Learning services.

What is Azure Machine Learning? It is a cloud-based environment you can use to train, deploy, automate, manage, and track ML models. It can be used for any kind of machine learning, from classical ML to deep learning, both supervised and unsupervised.

It supports Python and R code through the SDK, and it also gives you the option of using the Azure Machine Learning studio designer for a drag-and-drop, no-code approach. It also supports out-of-the-box experiment tracking, prediction model workflows, and managing, deploying, and monitoring models.

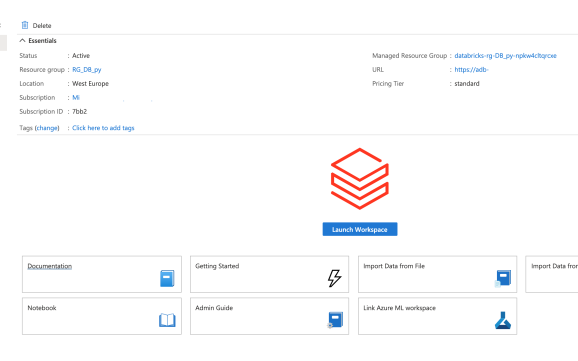

Log in to your Azure Portal and select the Databricks service. On the main page of the Databricks service in Azure Portal, select “Link Azure ML workspace”.

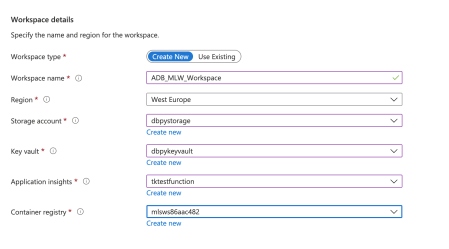

After selecting “Link Azure ML workspace”, you will be prompted for additional information, mainly because we are connecting two separate services.

Many of these resources should already be available from previous days (Key Vault, Storage Account, Container Registry). You should only need to create a new Application Insights resource.

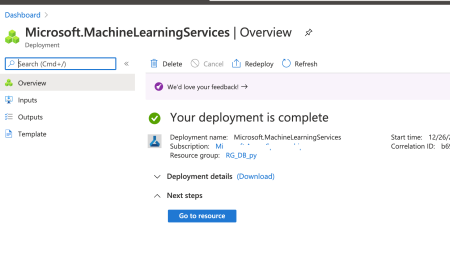

Click Review + create and Create.

Once the deployment completes, you can download the deployment script or go directly to the resource.

Among the resources, one new resource will be created for you. Go to this resource. You will see the overview of the Machine Learning workspace, from which you can launch the Studio.

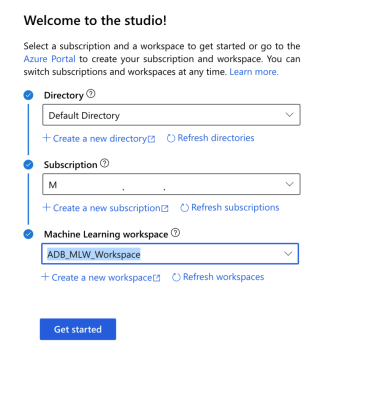

Since this is the first-time setup of a new workspace that is connected to Azure Databricks, you will be prompted for additional information about the Active Directory account.

Hit that “Get started” button to launch the Studio.

You will be presented with a brand new Machine Learning workspace. After you instantiate your workspace, MLflow Tracking automatically records your runs in both of the following places:

- The linked Azure Machine Learning workspace.

- Your original Azure Databricks (ADB) workspace.

All your experiments land in the managed Azure Machine Learning tracking service.

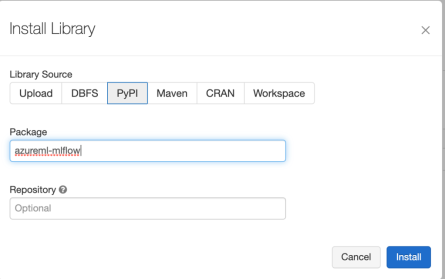

Now go to Azure Databricks and add some additional packages. In Azure Databricks, on the cluster that we already used for MLflow, install the azureml-mlflow package from PyPI.
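Once the library is attached to the cluster, it can be useful to confirm from a notebook cell that the package is actually visible to Python. The following is a minimal sketch, not part of the original notebook: the helper name `installed_version` is hypothetical, and it assumes a runtime with Python 3.8 or newer, where `importlib.metadata` is part of the standard library.

```python
from importlib import metadata


def installed_version(package_name):
    """Return the installed version of a package, or None if it is missing."""
    try:
        return metadata.version(package_name)
    except metadata.PackageNotFoundError:
        return None


# azureml-mlflow is the package named in the text; mlflow is assumed to be
# on the cluster already because it was used on earlier days.
for package in ("azureml-mlflow", "mlflow"):
    version = installed_version(package)
    print(f"{package}: {version or 'NOT INSTALLED'}")
```

If either line reports the package as not installed, restart the cluster or re-check the library installation status before continuing.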

Linking your ADB workspace to your Azure Machine Learning workspace enables you to track your experiment data in the Azure Machine Learning workspace.

The following code should be in your experiment notebook IN AZURE DATABRICKS (!) to get your linked Azure Machine Learning workspace.

    import mlflow
    import mlflow.azureml
    import azureml.mlflow
    import azureml.core
    from azureml.core import Workspace

    # Your subscription ID that you are running both Databricks and ML Service
    subscription_id = 'subscription_id'

    # Azure Machine Learning resource group NOT the managed resource group
    resource_group = 'resource_group_name'

    # Azure Machine Learning workspace name, NOT Azure Databricks workspace
    workspace_name = 'workspace_name'

    # Instantiate Azure Machine Learning workspace
    ws = Workspace.get(name=workspace_name,
                       subscription_id=subscription_id,
                       resource_group=resource_group)

    # Set MLflow experiment.
    experimentName = "/Users/{user_name}/{experiment_folder}/{experiment_name}"
    mlflow.set_experiment(experimentName)
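To check that the link works, you can log a small test run and then look for it in both workspaces. The following is a minimal sketch under stated assumptions, not the code from the original notebook: the helper names `experiment_path` and `log_test_run`, the user, folder and experiment names, and the parameter and metric values are all placeholders. The experiment folder is assumed to exist in your Databricks workspace.

```python
def experiment_path(user_name, experiment_folder, experiment_name):
    """Build a Databricks workspace path for an MLflow experiment."""
    return f"/Users/{user_name}/{experiment_folder}/{experiment_name}"


def log_test_run(path):
    """Log one run with a dummy parameter and metric to the given experiment."""
    import mlflow  # imported here so the path helper works without MLflow

    mlflow.set_experiment(path)
    with mlflow.start_run() as run:
        mlflow.log_param("alpha", 0.5)   # placeholder value
        mlflow.log_metric("rmse", 0.78)  # placeholder value
    return run.info.run_id


# Placeholder names; replace with your own user, folder and experiment.
path = experiment_path("someone@example.com", "Day26", "aml_link_test")
print(path)

# In a Databricks notebook attached to the linked cluster:
# run_id = log_test_run(path)
```

After calling `log_test_run`, the run should be visible under Experiments in Azure Databricks and in the Azure Machine Learning studio.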

If you want your models to be tracked and monitored only in the Azure Machine Learning service, add these two lines to your Databricks notebook:

    uri = ws.get_mlflow_tracking_uri()
    mlflow.set_tracking_uri(uri)
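After changing the tracking URI, it helps to check where runs will actually be recorded. The sketch below classifies a tracking URI by its shape. It rests on two assumptions that you should verify in your own environment: that the default tracking URI inside a Databricks notebook is `databricks`, and that the URI returned by the Azure Machine Learning workspace uses the `azureml://` scheme. The helper name `tracking_target` and the sample URIs are hypothetical.

```python
from urllib.parse import urlparse


def tracking_target(uri):
    """Classify an MLflow tracking URI by where runs will be recorded."""
    scheme = urlparse(uri).scheme
    if uri == "databricks" or scheme == "databricks":
        return "Azure Databricks"
    if scheme == "azureml":
        return "Azure Machine Learning"
    return "other"


# In a notebook you would pass mlflow.get_tracking_uri(); the URIs below are
# made-up examples of the two shapes this sketch assumes.
print(tracking_target("databricks"))
print(tracking_target("azureml://westeurope.api.azureml.ms/mlflow/v1.0/placeholder"))
```

In a notebook, `tracking_target(mlflow.get_tracking_uri())` would then tell you which service is currently receiving your runs.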

Tomorrow we will look at how to connect to your Azure Databricks service from your client machine or an on-prem machine.

The complete set of code and the notebook are available in the GitHub repository.

Happy Coding and Stay Healthy!
