Advent of 2020, Day 14 – From configuration to execution of Databricks jobs


Series of Azure Databricks posts:

- Dec 01: What is Azure Databricks

- Dec 02: How to get started with Azure Databricks

- Dec 03: Getting to know the workspace and Azure Databricks platform

- Dec 04: Creating your first Azure Databricks cluster

- Dec 05: Understanding Azure Databricks cluster architecture, workers, drivers and jobs

- Dec 06: Importing and storing data to Azure Databricks

- Dec 07: Starting with Databricks notebooks and loading data to DBFS

- Dec 08: Using Databricks CLI and DBFS CLI for file upload

- Dec 09: Connect to Azure Blob storage using Notebooks in Azure Databricks

- Dec 10: Using Azure Databricks Notebooks with SQL for Data engineering tasks

- Dec 11: Using Azure Databricks Notebooks with R Language for data analytics

- Dec 12: Using Azure Databricks Notebooks with Python Language for data analytics

- Dec 13: Using Python Databricks Koalas with Azure Databricks

Over the last four days we explored the languages available in Azure Databricks. Today we will look at Databricks jobs and what we can do with them besides running them.

1. Viewing a job

In the left vertical navigation bar, click the Jobs icon:

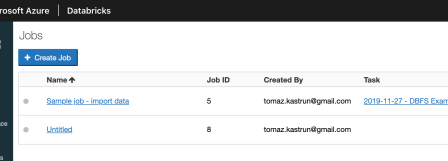

You will get to the screen where you can view all your jobs:

Each job has the following attributes (most are self-explanatory):

- Name – Name of the Databricks job

- Job ID – ID of the job, set automatically

- Created By – Name of the user (AD username) who created the job

- Task – Name of the notebook that is attached and executed when the job is triggered. A task can also be a JAR file or a spark-submit command.

- Cluster – Name of the cluster that is attached to this job. When the job is fired, all the work is done on this cluster.

- Schedule – CRON expression written in a human-readable form

- Last Run – Status of the last run (e.g. successful, failed, running)

- Action – buttons available to start or terminate the job.
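The same attributes can also be read programmatically. The sketch below assumes a job record shaped like the ones returned by the Databricks Jobs REST API (version 2.0, `jobs/list`). The helper name `summarize_job` and all sample values are hypothetical, and the field names should be checked against the API version in your workspace.

```python
from typing import Any, Dict


def summarize_job(job: Dict[str, Any]) -> Dict[str, Any]:
    """Map a Jobs API style record to the columns shown in the Jobs list."""
    settings = job.get("settings", {})
    # In this sketch, take whichever task type is present in the settings.
    if "notebook_task" in settings:
        task = settings["notebook_task"].get("notebook_path")
    elif "spark_jar_task" in settings:
        task = settings["spark_jar_task"].get("main_class_name")
    elif "spark_submit_task" in settings:
        task = "spark-submit"
    else:
        task = None
    return {
        "Name": settings.get("name"),
        "Job ID": job.get("job_id"),
        "Created By": job.get("creator_user_name"),
        "Task": task,
        "Cluster": settings.get("existing_cluster_id", "new cluster"),
        "Schedule": settings.get("schedule", {}).get("quartz_cron_expression"),
    }


# Hypothetical record, for illustration only (not real workspace data)
sample_job = {
    "job_id": 1,
    "creator_user_name": "someone@example.com",
    "settings": {
        "name": "Day14_Job",
        "existing_cluster_id": "1214-000000-example1",
        "notebook_task": {"notebook_path": "/Users/someone@example.com/Day14_Running_job"},
        "schedule": {"quartz_cron_expression": "0 0 * * * ?", "timezone_id": "UTC"},
    },
}

print(summarize_job(sample_job))
```

The Last Run and Action columns are left out on purpose, because they describe runs of the job rather than its definition.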

2. Creating a job

If you would like to create a new job, use the “+Create job” button:

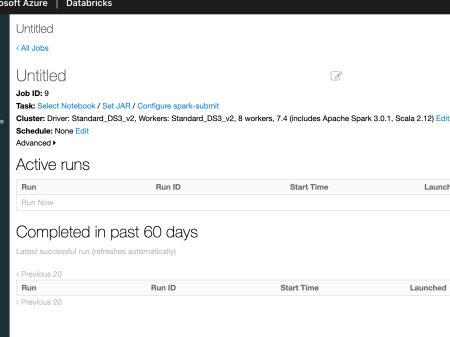

You will be taken to a new page where you fill in all the needed information:

Give the job a name by renaming “Untitled”. I named my job “Day14_Job”. The next step is to create a task. A task can be:

- Selected Notebook (a notebook, which we learned to create and use on Day 7)

- Set JAR file (a program or function that can be executed using a main class and arguments)

- Configure spark-submit (spark-submit is an Apache Spark script for launching applications on a cluster)

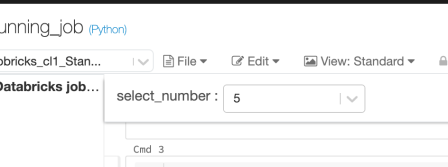

We will use “Selected Notebook”. Go to the workspace and create a new notebook. I have named mine Day14_Running_job, and it uses Python. The notebook is available in the same GitHub repository, and its code is:

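```python
# Create a dropdown widget with the values 1 to 9 and a default of 1
dbutils.widgets.dropdown("select_number", "1", [str(x) for x in range(1, 10)])

# Display the currently selected value
dbutils.widgets.get("select_number")

# Read the selected value (it is returned as a string)
num_Select = dbutils.widgets.get('select_number')

# Print the numbers from 1 up to, but not including, the selected value
for i in range(1, int(num_Select)):
    print(i, end=',')
```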

We have created a widget that appears as a dropdown menu at the top of the notebook. Its value is set once and can be read multiple times in the notebook. dbutils.widgets.get returns the value as a string, which is why the code converts it with int() before using it in the loop.
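To see what the loop prints for a given selection without a Databricks cluster, here is a minimal sketch that reproduces the loop logic in plain Python. The function name `numbers_below` is my own and is not part of the notebook; the widget value is passed in as a string, just as dbutils.widgets.get would return it.

```python
def numbers_below(selected: str) -> str:
    """Return what the notebook loop prints for a given widget value."""
    # Widget values are strings, so convert before using them in range()
    upper = int(selected)
    # range(1, upper) stops before upper, exactly as in the notebook loop
    return "".join(f"{i}," for i in range(1, upper))


print(numbers_below("5"))        # 1,2,3,4,
print(repr(numbers_below("1")))  # '' (the default value prints nothing)
```

Note that the selected number itself is not printed, because the upper bound of range() is exclusive.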

You can copy and paste the code into code cells or use the notebook in the GitHub repository. We will use the notebook Day14_Running_job with the job Day14_Job.

Navigate back to Jobs and you will see that Azure Databricks has automatically saved your work in progress. In this job (Day14_Job), select the notebook you have created.

We will need to add some additional parameters:

- Task – Parameter

- Dependent libraries

- Cluster

- Schedule

The parameters can be read with the dbutils.widgets commands, the same ones we used in the notebook. In this way we give the notebook some external parametrization. Since we are not using any additional libraries, just attach the cluster. I am using an existing cluster rather than creating a new one (as offered by default). Finally, select the schedule timing (my cron configuration is 0 0 * * * ?, which executes the job at the start of every hour). At the end, my setup looks like:
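The schedule uses the Quartz cron syntax, which has a leading seconds field and an optional trailing year field. As a small illustration, the sketch below splits an expression into named fields. The helper `describe_quartz` is hypothetical and only labels the fields; it does not validate their values.

```python
QUARTZ_FIELDS = ["seconds", "minutes", "hours", "day_of_month", "month", "day_of_week"]


def describe_quartz(expression: str) -> dict:
    """Label each field of a Quartz cron expression (6 fields, or 7 with year)."""
    parts = expression.split()
    if len(parts) not in (6, 7):
        raise ValueError(f"expected 6 or 7 fields, got {len(parts)}")
    fields = dict(zip(QUARTZ_FIELDS, parts))
    if len(parts) == 7:
        fields["year"] = parts[6]
    return fields


fields = describe_quartz("0 0 * * * ?")
print(fields)
```

With seconds and minutes fixed at 0 and the hours field set to `*`, the job fires at the top of every hour.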

Under the advanced features, you can also set the following:

- Alerts – set up email alerts (to one or more addresses) for three events: on start, on success or on failure.

- Maximum Concurrent Runs – the upper limit on how many runs of this job can execute at the same time

- Timeout – the time (in minutes) after which a running job is terminated

- Retries – the number of times a failed job is retried
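For readers who prefer configuration as code, the settings above can be collected into a single job definition. This is only a sketch: the field names follow the Jobs API 2.0 request format as I understand it and should be treated as an assumption, and the notebook path, cluster ID, email address and the helper `build_job_settings` are hypothetical. Nothing is sent to a workspace; the code only builds and prints the definition.

```python
import json
from typing import Dict, List, Optional


def build_job_settings(
    name: str,
    notebook_path: str,
    cluster_id: str,
    cron: str,
    parameters: Dict[str, str],
    alert_emails: Optional[List[str]] = None,
    timeout_minutes: int = 0,
    max_retries: int = 0,
    max_concurrent_runs: int = 1,
) -> dict:
    """Assemble a job definition; field names are assumed from Jobs API 2.0."""
    settings = {
        "name": name,
        "existing_cluster_id": cluster_id,
        "notebook_task": {
            "notebook_path": notebook_path,
            # Each key should match a widget name in the notebook
            "base_parameters": parameters,
        },
        "schedule": {"quartz_cron_expression": cron, "timezone_id": "UTC"},
        "max_concurrent_runs": max_concurrent_runs,
        "max_retries": max_retries,
    }
    if timeout_minutes:
        # The UI takes minutes, while this field is expressed in seconds
        settings["timeout_seconds"] = timeout_minutes * 60
    if alert_emails:
        # Only the on-failure alert is set in this sketch
        settings["email_notifications"] = {"on_failure": alert_emails}
    return settings


settings = build_job_settings(
    name="Day14_Job",
    notebook_path="/Users/someone@example.com/Day14_Running_job",  # hypothetical
    cluster_id="1214-000000-example1",  # hypothetical
    cron="0 0 * * * ?",
    parameters={"select_number": "5"},
    alert_emails=["someone@example.com"],
    timeout_minutes=10,
    max_retries=1,
)
print(json.dumps(settings, indent=2))
```

The `base_parameters` entry is where the task parameter meets the notebook: the key `select_number` is the widget name used in Day14_Running_job.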

3. Executing a job

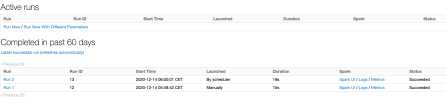

We leave all advanced settings at their defaults. You can run the job manually or leave it as it is and check whether the CRON schedule does the work. I have run one manually and left one to be run automatically.

You can also check each run separately and see what has been executed. Each run has its own ID and holds typical information, such as Duration, Start time, Status, Type and which Task was executed, among others.
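The run details can be summarised in the same way as the job list. The sketch below assumes a run record shaped like the ones the Jobs API 2.0 returns (timestamps and durations in milliseconds); the helper `summarize_run` and the sample values are hypothetical, and the field names should be verified against your workspace.

```python
from datetime import datetime, timezone
from typing import Any, Dict


def summarize_run(run: Dict[str, Any]) -> Dict[str, Any]:
    """Turn a run record into the columns shown on the run page."""
    state = run.get("state", {})
    # start_time is assumed to be epoch milliseconds
    start = datetime.fromtimestamp(run["start_time"] / 1000, tz=timezone.utc)
    return {
        "Run ID": run["run_id"],
        "Start time": start.isoformat(),
        "Duration (s)": run.get("execution_duration", 0) / 1000,
        # Fall back to the life cycle state while the run has no result yet
        "Status": state.get("result_state") or state.get("life_cycle_state"),
        "Type": run.get("trigger"),
    }


# Hypothetical record, for illustration only (not real run output)
sample_run = {
    "run_id": 101,
    "start_time": 1607904000000,
    "execution_duration": 12000,
    "trigger": "PERIODIC",
    "state": {"life_cycle_state": "TERMINATED", "result_state": "SUCCESS"},
}

print(summarize_run(sample_run))
```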

Best of all, the results of the notebook are also available and persisted in the log of the job. This is especially useful if you don’t want to store the results of your job in a file or table, but only need to see them after the run.

Do not forget to stop running jobs when you no longer need them. This is important because scheduled jobs are triggered even if the cluster is not running. If you assume that the cluster is stopped while the job is still active, the job can generate traffic and unwanted expenses. In addition, get a feeling for how long the job runs, so you can plan the cluster up and down time accordingly.

Tomorrow we will explore the Spark UI, metrics and logs that are available on the Databricks cluster and job.

The complete set of code and notebooks will be available in the GitHub repository.

Happy Coding and Stay Healthy!
