Advent of 2020, Day 10 – Using Azure Databricks Notebooks with SQL for Data engineering tasks

Want to share your content on R-bloggers? click here if you have a blog, or here if you don't.

Series of Azure Databricks posts:

- Dec 01: What is Azure Databricks

- Dec 02: How to get started with Azure Databricks

- Dec 03: Getting to know the workspace and Azure Databricks platform

- Dec 04: Creating your first Azure Databricks cluster

- Dec 05: Understanding Azure Databricks cluster architecture, workers, drivers and jobs

- Dec 06: Importing and storing data to Azure Databricks

- Dec 07: Starting with Databricks notebooks and loading data to DBFS

- Dec 08: Using Databricks CLI and DBFS CLI for file upload

- Dec 09: Connect to Azure Blob storage using Notebooks in Azure Databricks

Yesterday we were working our way to get data from Azure Storage to Azure Databricks using dbfs mount function and sorted credentials (secret, key).

Log into Azure Databricks portal and create a new Notebook (day 7 explains how to create Notebook). In this notebook, we will use SQL to explore data engineering tasks. I have given my a name Day10_SQL_EngineeringTasks and selecting default Language Python. Attach a cluster to Notebook.

And here is the Notebook, and how it looks:

- Exploring Databases with SHOW

SHOW is a useful clause to see that your database holds.

%sql SHOW TABLES; %sql SHOW TABLES FROM default; %sql SHOW TABLES IN default LIKE 'day6*'

2, Creating database and getting information with DESCRIBE

Creating a database is simple, by defining the location and adding some information.

%sql CREATE DATABASE IF NOT EXISTS Day10 COMMENT 'This is a sample database for day10' LOCATION '/user';

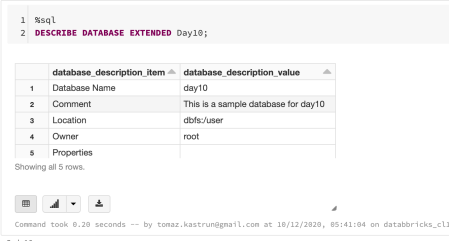

Getting some additional information can be done with DESCRIBE clause.

%sql DESCRIBE DATABASE EXTENDED Day10;

3. Creating tables and connecting it with CSV

For the underlying CSV we will create a table. We will be using CSV file from Day 6, and it should be still available on location dbfs:/FileStore/Day6_data_dbfs.csv. This dataset has three columns (Date, Temperature and City) and it should be good starting example.

%sql USE Day10; DROP TABLE IF EXISTS temperature; CREATE TABLE temperature (date STRING, mean_daily_temp STRING, city STRING)

And we can check the content of the table and the database:

%sql USE Day10; SELECT * FROM temperature %sql SHOW TABLES IN Day10;

And now connect CSV with the table (or view):

%sql USE Day10; DROP VIEW IF EXISTS temp_view2; CREATE TEMPORARY VIEW temp_view2 USING CSV OPTIONS (path "/FileStore/Day6Data_dbfs.csv", header "true", mode "FAILFAST")

And check the content:

%sql USE Day10; SELECT * FROM temp_view2

IF you would want to change the data type of a particular column, you can also do it as:

%sql USE Day10; ALTER TABLE temperature CHANGE COLUMN mean_daily_temp INT

4. Creating a JOIN between two tables

Let’s create two sample tables :

%sql USE Day10; DROP TABLE IF EXISTS temp1; DROP TABLE IF EXISTS temp2; CREATE TABLE temp1 (id_t1 INT, name STRING, temperature INT); CREATE TABLE temp2 (id_t2 INT, name STRING, temperature INT);

And add some insert statements:

%sql USE Day10; INSERT INTO temp1 VALUES (2, 'Ljubljana', 1); INSERT INTO temp1 VALUES (3, 'Seattle', 5); INSERT INTO temp2 VALUES (1, 'Ljubljana', -3); INSERT INTO temp2 VALUES (2, 'Seattle`', 3);

And create an inner join

%sql USE Day10; SELECT t1.Name as City1 ,t2.Name AS City2 ,t1.temperature*t2.Temperature AS MultipliedTemperature FROM temp1 AS t1 JOIN temp2 AS t2 ON t1.id_t1 = t2.id_t2 WHERE t1.name <> t2.name LIMIT 1

If you follow the notebook, you will find some additional information, but all in all, the HIVE SQL is ANSI compliant and getting started, should be no problem. When using notebook, each cell must have a language defined at the beginning, unless it is a language of kernel. %sql for SQL language, %md for Markdown, %r for R language, %scala for Scala. Beware, these language pointers are case sensitive, so %sql will interpret as SQL script, where as %SQL will return an error.

Tomorrow we will check and explore how to use R to do data engineering, but mostly the data analysis tasks. So, stay tuned.

Complete set of code and Notebooks will be available at the Github repository.

Happy Coding and Stay Healthy!

R-bloggers.com offers daily e-mail updates about R news and tutorials about learning R and many other topics. Click here if you're looking to post or find an R/data-science job.

Want to share your content on R-bloggers? click here if you have a blog, or here if you don't.