Passing two SQL queries to sp_execute_external_script

Want to share your content on R-bloggers? click here if you have a blog, or here if you don't.

Recently, I got a question on one of my previous blog posts, if there is possibility to pass two queries in same run-time as an argument to external procedure sp_execute_external_script.

Some of the arguments of the procedure sp_execute_external_script are enumerated. This is valid for the inputting dataset and as the name of argument @input_data_1 suggests, one can easily (and this is valid doubt) think, there can also be @input_data_2 argument, and so on. Unfortunately, this is not true. External procedure can hold only one T-SQL dataset, inserted through this parameter.

There are many reasons for that, one would be the cost of sending several datasets to external process and back, so inadvertently, this forces user to rethink and pre-prepare the dataset (meaning, do all the data munging beforehand), prior to sending it into external procedure.

But there are workarounds on how to pass additional query/queries to sp_execute_external_script. I am not advocating this, and I strongly disagree with such usage, but here it is.

First I will create two small datasets, using T-SQL

USE SQLR;

GO

DROP TABLE IF EXISTS dataset;

GO

CREATE TABLE dataset

(ID INT IDENTITY(1,1) NOT NULL

,v1 INT

,v2 INT

CONSTRAINT pk_dataset PRIMARY KEY (id)

)

SET NOCOUNT ON;

GO

INSERT INTO dataset(v1,v2)

SELECT TOP 1

(SELECT TOP 1 number FROM master..spt_values WHERE type IN ('EOB') ORDER BY NEWID()) AS V1

,(SELECT TOP 1 number FROM master..spt_values WHERE type IN ('EOD') ORDER BY NEWID()) AS v2

FROM master..spt_values

GO 50

This dataset will be used directly into @input_data_1 argument. The next one will be used through R code:

CREATE TABLE external_dataset

(ID INT IDENTITY(1,1) NOT NULL

,v1 INT

CONSTRAINT pk_external_dataset PRIMARY KEY (id)

)

SET NOCOUNT ON;

GO

INSERT INTO external_dataset(v1)

SELECT TOP 1

(SELECT TOP 1 number FROM master..spt_values WHERE type IN ('EOB') ORDER BY NEWID()) AS V1

FROM master..spt_values

GO 50

Normally, one would use a single dataset like:

EXEC sp_execute_external_script

@language = N'R'

,@script = N'OutputDataSet <- data.frame(MySet);'

,@input_data_1 = N'SELECT TOP 5 v1, v2 FROM dataset;'

,@input_data_1_name = N'MySet'

WITH RESULT SETS

((

Val1 INT

,Val2 INT

))

But by “injecting” the ODBC into R code, we can allow external procedure, to get back to your SQL Server and get additional dataset.

This can be done by following:

EXECUTE AS USER = 'RR';

GO

DECLARE @Rscript NVARCHAR(MAX)

SET @Rscript = '

library(RODBC)

myconn <-odbcDriverConnect("driver={SQL Server};

Server=SICN-KASTRUN;database=SQLR;uid=RR;pwd=Read!2$16")

External_source <- sqlQuery(myconn, "SELECT v1 AS v3

FROM external_dataset")

close(myconn)

Myset <- data.frame(MySet)

#Merge both datasets

mergeDataSet <- data.frame(cbind(Myset, External_source));'

EXEC sp_execute_external_script

@language = N'R'

,@script = @Rscript

,@input_data_1 = N'SELECT v1, v2 FROM dataset;'

,@input_data_1_name = N'MySet'

,@output_data_1_name = N'mergeDataSet'

WITH RESULT SETS

((

Val1 INT

,Val2 INT

,Val3 INT

))

REVERT;

GO

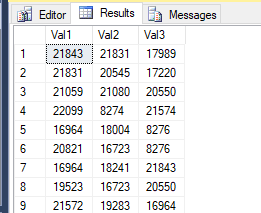

And the result will be merged two datasets, in total three columns:

which correspond to two datasets:

-- Check the results! SELECT * FROM dataset SELECT * FROM external_dataset

There are, as already mentioned, several opposing factors to this approach, and I would not recommend this. Some are:

- validating and keeping R code in one place

- performance issues

- additional costs of data transferring

- using ODBC connectors

- installing additional R packages (in my case RODBC package)

- keeping different datasets in one place

- security issues

- additional login/user settings

- firewall inbound/outbound rules setting

This, of course, can also be achieved with *.XDF file formats, if they are stored locally or on server as a files.

As always, code is available at Github.

Happy R-SQLing!

R-bloggers.com offers daily e-mail updates about R news and tutorials about learning R and many other topics. Click here if you're looking to post or find an R/data-science job.

Want to share your content on R-bloggers? click here if you have a blog, or here if you don't.