Express Intro to dplyr

Want to share your content on R-bloggers? click here if you have a blog, or here if you don't.

Working The Data Like a Boss !

I recently introduced the data.table package which provides a nice way to manage and aggregate large data sources using the standard bracket notation that is commonly employed when manipulating data frames in R. As data sources grow larger one must be prepared with a variety of approaches to efficiently handle this information. Using databases (both SQL and NoSQL) are a possibility wherein one queries for a subset of information although this assumes that the database is pre-existing or that you are prepared to create it yourself. The dplyr package offers ways to read in large files, interact with databases, and accomplish aggregation and summary. Some feel that dplyr is a competitor to the data.table package though I do not share that view. I think that each offers a well-conceived philosophy and approach and does a good job of delivering on their respective design goals. That there is overlap in their potential applications simply means to me that there is another way to do something. They are just great tools in a larger toolbox so I have no complaints. Let’s dig into dplyr to learn what it can do. Note that this post is part one of two. The second dplyr blog will apply the knowledge learned in this post.

Upcoming Class

Before we get too deep into this I wanted to indicate that I will be teaching a 3-day Intro to R BootCamp in the Atlanta, GA area of the US sometime in August or September. I say “sometime” because the logistics are still under development. If interested please feel free to email me and once I get everything lined up I will get back to you with the details. You can also visit my home page. Thanks for indulging my self-promotion. Steve – Now Back to the Action…

Verbs in Action !

dplyr is based on the idea that when working with data there are a number of common activities one will pursue: reading, filtering rows on some condition, selecting or excluding columns, arranging/sorting, grouping, summarize, merging/joining, and mutating/transforming columns. There are other activities but these describe the main categories. dplyr presents a number of commands or “verbs” that help you accomplish the work. Note that dplyr does not replace any existing commands – it simply gives you new commands:

| Command | Purpose |

|---|---|

| select() | Select columns from a data frame |

| filter() | Filter rows according to some condition(s) |

| arrange() | Sort / Re-order rows in a data frame |

| mutate() | Create new columns or transform existing ones |

| group_by() | Group a data frame by some factor(s) usually in conjunction to summary |

| summarize() | Summarize some values from the data frame or across groups |

| inner_join(x,y,by=”col”) | return all rows from ‘x’ where there are matching values in ‘x’, and all columns from ‘x’ and ‘y’. If there are multiple matches between ‘x’ and ‘y’, all combination of the matches are returned. |

| left_join(x,y,by=”col”) | return all rows from ‘x’, and all columns from ‘x’ and ‘y’. Rows in ‘x’ with no match in ‘y’ will have ‘NA’ values in the new columns. If there are multiple matches between ‘x’ and ‘y’, all combinations of the matches are returned. |

| right_join(x,y,by=”col”) | return all rows from ‘y’, and all columns from ‘x’ and y. Rows in ‘y’ with no match in ‘x’ will have ‘NA’ values in the new columns. If there are multiple matches between ‘x’ and ‘y’, all combinations of the matches are returned | anti_join(x,y,by=”col”) | return all rows from ‘x’ where there are not matching values in ‘y’, keeping just columns from ‘x’ |

readr

There is also an associated package called readr that is more efficient at ingesting CSV files than the base R functions such as read.csv. While it is not part of the actual dplyr package it does in fact produce a dplyr structure as it reads in files. readr provides the read_csv function to do the work. It is also pretty smart and can figure things out like if there is a header or not so you don’t have to provide a lot of additional arguments. Here is an example using a file that contains information on weather station measurements in the year 2013.

install.packages("readr") # one time only

library(readr)

url <- "http://steviep42.bitbucket.org/YOUTUBE.DIR/weather.csv"

download.file(url,"weather.csv")

system("head -5 weather.csv") # Take a peak at the first 5 lines

"origin","year","month","day","hour","temp","dewp","humid","wind_dir","wind_speed","wind_gust","precip","pressure","visib"

"EWR",2013,1,1,0,37.04,21.92,53.97,230,10.35702,11.9186514756,0,1013.9,10

"EWR",2013,1,1,1,37.04,21.92,53.97,230,13.80936,15.8915353008,0,1013,10

"EWR",2013,1,1,2,37.94,21.92,52.09,230,12.65858,14.5672406924,0,1012.6,10

"EWR",2013,1,1,3,37.94,23,54.51,230,13.80936,15.8915353008,0,1012.7,10

weather <- read_csv("weather.csv")

weather

Source: local data frame [8,719 x 14]

origin year month day hour temp dewp humid wind_dir wind_speed

(chr) (int) (int) (int) (int) (dbl) (dbl) (dbl) (int) (dbl)

1 EWR 2013 1 1 0 37.04 21.92 53.97 230 10.35702

2 EWR 2013 1 1 1 37.04 21.92 53.97 230 13.80936

3 EWR 2013 1 1 2 37.94 21.92 52.09 230 12.65858

4 EWR 2013 1 1 3 37.94 23.00 54.51 230 13.80936

5 EWR 2013 1 1 4 37.94 24.08 57.04 240 14.96014

6 EWR 2013 1 1 6 39.02 26.06 59.37 270 10.35702

7 EWR 2013 1 1 7 39.02 26.96 61.63 250 8.05546

8 EWR 2013 1 1 8 39.02 28.04 64.43 240 11.50780

9 EWR 2013 1 1 9 39.92 28.04 62.21 250 12.65858

10 EWR 2013 1 1 10 39.02 28.04 64.43 260 12.65858

.. ... ... ... ... ... ... ... ... ... ...

Variables not shown: wind_gust (dbl), precip (dbl), pressure (dbl), visib (dbl)

tbl_df

It is important to note that dplyr works transparently with existing R data frames though ideally one should explicitly create or transform an existing data frame to a dplyr structure to get the full benefit of the package. Let’s use the dplyr tbl_df command to wrap an existing data frame. We’ll convert the infamous mtcars data frame into a dplyr table since it is a small data frame that is easy to understand. The main advantage in using a ‘tbl_df’ over a regular data frame is the printing: tbl objects only print a few rows and all the columns that fit on one screen, describing the rest of it as text.

dp_mtcars <- tbl_df(mtcars) # dp_mtcars is a data frame as well as a dplyr object class(dp_mtcars) [1] "tbl_df" "tbl" "data.frame"

In the example below (as with the readr example above) notice how only a subset of the data gets printed by default. This is actually very nice especially if you have ever accidentally typed the name of a really, really large native data frame. R will dutifully try to print a large portion of the data even if it locks up your R session. So wrapping the data frame in a dplyr table will prevent this. Also notice how you get a summary of the number of rows and columns as well as the type of each column.

dp_mtcars

Source: local data frame [32 x 11]

mpg cyl disp hp drat wt qsec vs am gear carb

(dbl) (dbl) (dbl) (dbl) (dbl) (dbl) (dbl) (dbl) (dbl) (dbl) (dbl)

1 21.0 6 160.0 110 3.90 2.620 16.46 0 1 4 4

2 21.0 6 160.0 110 3.90 2.875 17.02 0 1 4 4

3 22.8 4 108.0 93 3.85 2.320 18.61 1 1 4 1

4 21.4 6 258.0 110 3.08 3.215 19.44 1 0 3 1

5 18.7 8 360.0 175 3.15 3.440 17.02 0 0 3 2

6 18.1 6 225.0 105 2.76 3.460 20.22 1 0 3 1

7 14.3 8 360.0 245 3.21 3.570 15.84 0 0 3 4

8 24.4 4 146.7 62 3.69 3.190 20.00 1 0 4 2

9 22.8 4 140.8 95 3.92 3.150 22.90 1 0 4 2

10 19.2 6 167.6 123 3.92 3.440 18.30 1 0 4 4

..

Now we could start to operate on this data frame / dplyr table by using some of the commands on offer from dplyr. They do pretty much what the name implies and you could use them in isolation though the power of dplyr comes through when using the piping operator to chain together commands. We’ll get there soon enough. Here are some basic examples:

filter()

# Find all rows where MPG is >= 30 and Weight is over 1.8 tons

filter(dp_mtcars, mpg >= 30 & wt > 1.8)

Source: local data frame [2 x 11]

mpg cyl disp hp drat wt qsec vs am gear carb

(dbl) (dbl) (dbl) (dbl) (dbl) (dbl) (dbl) (dbl) (dbl) (dbl) (dbl)

1 32.4 4 78.7 66 4.08 2.200 19.47 1 1 4 1

2 33.9 4 71.1 65 4.22 1.835 19.90 1 1 4 1

select()

The following example illustrates how the select() function works. We will select all columns whose name begins with the letter “m”. This is more useful when you have lots of columns that are named according to some pattern. For example some Public Health data sets can have many, many columns (hundreds even) so counting columns becomes impractical which is why select() supports a form of regular expressions to find columns by name. Other helpful arguments in this category include:

| Argument | Purpose |

|---|---|

| ends_with(x, ignore.case=TRUE) | Finds columns whose nqme ends with “x” |

| contains(x, ignore.case=TRUE) | Finds columns whose nqme contains “x” |

| matches(x, ignore.case=TRUE) | Finds columns whose names match the regular expression “x” |

| num_range(“x”,1:5, width=2) | selects all variables (numerically) from x01 to x05 |

| one_of(“x”, “y”, “z”) | Selects variables provided in a character vector |

select(dp_mtcars,starts_with("m"))

Source: local data frame [32 x 1]

mpg

(dbl)

1 21.0

2 21.0

3 22.8

4 21.4

5 18.7

6 18.1

7 14.3

8 24.4

9 22.8

10 19.2

# Get all columns except columns 5 through 10

select(dp_mtcars,-(5:10))

Source: local data frame [32 x 5]

mpg cyl disp hp carb

(dbl) (dbl) (dbl) (dbl) (dbl)

1 21.0 6 160.0 110 4

2 21.0 6 160.0 110 4

3 22.8 4 108.0 93 1

4 21.4 6 258.0 110 1

5 18.7 8 360.0 175 2

6 18.1 6 225.0 105 1

7 14.3 8 360.0 245 4

8 24.4 4 146.7 62 2

9 22.8 4 140.8 95 2

10 19.2 6 167.6 123 4

.. ... ... ... ... ...

mutate()

Here we use the mutate() function to transform the wt variable by multiplying it by 1,000 and then we create a new variable called “good_mpg” which takes on a value of “good” or “bad” depending on if a given row’s MPG value is > 25 or not

mutate(dp_mtcars, wt=wt*1000, good_mpg=ifelse(mpg > 25,"good","bad"))

Source: local data frame [32 x 12]

mpg cyl disp hp drat wt qsec vs am gear carb good_mpg

(dbl) (dbl) (dbl) (dbl) (dbl) (dbl) (dbl) (dbl) (dbl) (dbl) (dbl) (chr)

1 21.0 6 160.0 110 3.90 2620 16.46 0 1 4 4 bad

2 21.0 6 160.0 110 3.90 2875 17.02 0 1 4 4 bad

3 22.8 4 108.0 93 3.85 2320 18.61 1 1 4 1 bad

4 21.4 6 258.0 110 3.08 3215 19.44 1 0 3 1 bad

5 18.7 8 360.0 175 3.15 3440 17.02 0 0 3 2 bad

6 18.1 6 225.0 105 2.76 3460 20.22 1 0 3 1 bad

7 14.3 8 360.0 245 3.21 3570 15.84 0 0 3 4 bad

8 24.4 4 146.7 62 3.69 3190 20.00 1 0 4 2 bad

9 22.8 4 140.8 95 3.92 3150 22.90 1 0 4 2 bad

10 19.2 6 167.6 123 3.92 3440 18.30 1 0 4 4 bad

.. ... ... ... ... ... ... ... ... ... ... ... ...

arrange()

Next we could sort or arrange the data according to some column values. This is usually to make visual inspection of the data easier. Let’s sort the data frame by cars with the worst MPG and then sort by weight from heaviest to lightest.

arrange(dp_mtcars,mpg,desc(wt))

Source: local data frame [32 x 11]

mpg cyl disp hp drat wt qsec vs am gear carb

(dbl) (dbl) (dbl) (dbl) (dbl) (dbl) (dbl) (dbl) (dbl) (dbl) (dbl)

1 10.4 8 460.0 215 3.00 5.424 17.82 0 0 3 4

2 10.4 8 472.0 205 2.93 5.250 17.98 0 0 3 4

3 13.3 8 350.0 245 3.73 3.840 15.41 0 0 3 4

4 14.3 8 360.0 245 3.21 3.570 15.84 0 0 3 4

5 14.7 8 440.0 230 3.23 5.345 17.42 0 0 3 4

6 15.0 8 301.0 335 3.54 3.570 14.60 0 1 5 8

7 15.2 8 275.8 180 3.07 3.780 18.00 0 0 3 3

8 15.2 8 304.0 150 3.15 3.435 17.30 0 0 3 2

9 15.5 8 318.0 150 2.76 3.520 16.87 0 0 3 2

10 15.8 8 351.0 264 4.22 3.170 14.50 0 1 5 4

.. ... ... ... ... ... ... ... ... ... ... ...

Ceci n’est pas une pipe

While the above examples are instructive they are not, at least in my opinion, the way to best use dplyr. Once you get up to speed with dplyr functions I think you will soon agree that using “pipes” to create chains of commands is the way to go. If you come from a UNIX background you will no doubt have heard of “pipes” which is a construct allowing you to take the output of one command and route it or “pipe” it to the input of another program. This can be done for several commands thus creating a chain of piped commands. One can save typing while creating, in effect, a “one line program” that does a lot. Here is an example of a UNIX pipeline. I’m using Apple OSX but this should work on a Linux machine just as well. This example will pipe the output of the /etc/passwd file into the input of the awk command (a program used to parse files) and the output of the awk command will go into the input of the tail command which lists the last 10 lines of the final result.

$ cat /etc/passwd | awk -F: '{print $1}' | tail

_krb_krbtgt

_krb_kadmin

_krb_changepw

_krb_kerberos

_krb_anonymous

_assetcache

_coremediaiod

_xcsbuildagent

_xcscredserver

_launchservicesd

$

This is a great paradigm for working on UNIX that also maps well for what one does in data exploration. When first encountering data you rarely know what it is you want to get from it (unless you are a student and your teacher told you specifically what she or he wants). So you embark on some exploratory work and start to interrogate the data which might first require some filtering and maybe exclusion of incomplete data or maybe some imputation for missing values. Until you have worked with it for a while you don’t want to change the data – you just want to experiment with various transformed and grouped versions of it which is much easier if you use dplyr. Just pipe various commands together to clean up your data, make some visualizations, and perhaps generate some hypotheses about your data. You find yourself generating some pretty involved adhoc command chains without having to create a standalone script file. The dplyr package uses the magrittr package to enable this piping capability within R. The “pipe” character is “%>%” which is different from the traditional UNIX pipe which is the vertical bar “|”. But don’t let the visual difference confuse you as, conceptually, pipes in R work just like they do in UNIX. The magrittr package has a motto “Ceci n’est pas une pipe” presumably in acknowledgement of the noted difference and also as a tribute to the painter Rene Magritte’s work La trahison des images.

# Here we filter rows where MPG is >= 25 and then select only rows 1-4

# and 10-11.

dp_mtcars %>% filter(mpg >= 25) %>% select(-c(5:9))

Source: local data frame [6 x 6]

mpg cyl disp hp gear carb

(dbl) (dbl) (dbl) (dbl) (dbl) (dbl)

1 32.4 4 78.7 66 4 1

2 30.4 4 75.7 52 4 2

3 33.9 4 71.1 65 4 1

4 27.3 4 79.0 66 4 1

5 26.0 4 120.3 91 5 2

6 30.4 4 95.1 113 5 2

Next we filter rows where MPG is >= 25 and then select only rows 1-4 and 10-11 after which we sort the result by MPG from highest to lowest. You can keep adding as many pipes as you wish. At first, while you are becoming familiar with the idea, it is best to keep the pipeline relatively short so you can check your work. But it will not be long before you are stringing together lots of different commands. dplyr enables and encourages this type of activity so don’t be shy.

dp_mtcars %>% filter(mpg >= 25) %>% select(-c(5:9)) %>% arrange(desc(mpg))

Source: local data frame [6 x 6]

mpg cyl disp hp gear carb

(dbl) (dbl) (dbl) (dbl) (dbl) (dbl)

1 33.9 4 71.1 65 4 1

2 32.4 4 78.7 66 4 1

3 30.4 4 75.7 52 4 2

4 30.4 4 95.1 113 5 2

5 27.3 4 79.0 66 4 1

6 26.0 4 120.3 91 5 2

That was pretty cool wasn’t it ? We don’t need to alter dp_mtcars at all to explore it. We could change our minds about how and if we want to filter, select, or sort. The way this works is that the output of the dp_mtcars data frame/table gets sent to the input of the filter function that is aware of the source which is why we don’t need to explicitly reference dp_mtcars by name. The output of the filter step gets sent to the select function which in turns pipes or chains its output into the input of the arrange function which sends its output to the screen. We could even pipe the output of these operations to the ggplot2 package. But first let’s convert some of the columns into factors so the resulting plot will look better.

# Turn the cyl and am variables into factors. Notice that the resulting

# output reflects the change

dp_mtcars %>%

mutate(cyl=factor(cyl,levels=c(4,6,8)),

am=factor(am,labels=c("Auto","Manual" )))

mpg cyl disp hp drat wt qsec vs am gear carb

(dbl) (fctr) (dbl) (dbl) (dbl) (dbl) (dbl) (dbl) (fctr) (dbl) (dbl)

1 21.0 6 160.0 110 3.90 2.620 16.46 0 Manual 4 4

2 21.0 6 160.0 110 3.90 2.875 17.02 0 Manual 4 4

3 22.8 4 108.0 93 3.85 2.320 18.61 1 Manual 4 1

4 21.4 6 258.0 110 3.08 3.215 19.44 1 Auto 3 1

5 18.7 8 360.0 175 3.15 3.440 17.02 0 Auto 3 2

6 18.1 6 225.0 105 2.76 3.460 20.22 1 Auto 3 1

7 14.3 8 360.0 245 3.21 3.570 15.84 0 Auto 3 4

8 24.4 4 146.7 62 3.69 3.190 20.00 1 Auto 4 2

9 22.8 4 140.8 95 3.92 3.150 22.90 1 Auto 4 2

10 19.2 6 167.6 123 3.92 3.440 18.30 1 Auto 4 4

.. ... ... ... ... ... ... ... ... ... ... ...

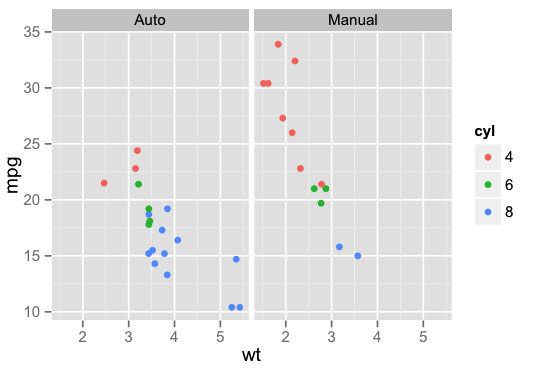

But that was kind of boring – Let’s visualize this using the ggplot package whose author, Hadley Wickham, is also the author of dplyr.

dp_mtcars %>% mutate(cyl=factor(cyl,levels=c(4,6,8)),

am=factor(am,labels=c("Auto","Manual" ))) %>%

ggplot(aes(x=wt,y=mpg,color=cyl)) +

geom_point() + facet_wrap(~am)

Okay well that might have been too much for you and that’s okay if it is. Let’s break this down into two steps. First let’s save the results of the mutate operation into a new data frame.

new_dp_mtcars <- dp_mtcars %>% mutate(cyl=factor(cyl,levels=c(4,6,8)),

am=factor(am,labels=c("Auto","Manual" )))

# Now we can call the ggplot command separately

ggplot(new_dp_mtcars,aes(x=wt,y=mpg,color=cyl)) +

geom_point() + facet_wrap(~am)

Pick whatever approach you want to break things down to the level you need. However, I guarantee that after a while you will probably wind up writing lots of one line programs.

Split-Apply-Combine

There are two more commands from the dplyr package that are particularly useful in aggregating data. The group_by() and summarize() functions help us group a data frame according to some factors and then apply some summary functions across those groups. The idea is to first “split” the data into groups, “apply” some functions (e.g. mean()) to some continuous quantity relating to each group, and then combine those group specific results back into an integrated result. In the next example we will group (or split) the data frame by the cylinder variable and then summarize the mean MPG for each group and then combine that into a final aggregated result.

dp_mtcars %>% group_by(cyl) %>% summarize(avg_mpg=mean(mpg))

Source: local data frame [3 x 2]

cyl avg_mpg

(dbl) (dbl)

1 4 26.66364

2 6 19.74286

3 8 15.10000

# Let's group by cylinder then by transmission type and then apply the mean

# and sd functions to mpg

dp_mtcars %>% group_by(cyl,am) %>% summarize(avg_mpg=mean(mpg),sd=sd(mpg))

Source: local data frame [6 x 4]

Groups: cyl [?]

cyl am avg_mpg sd

(dbl) (dbl) (dbl) (dbl)

1 4 0 22.90000 1.4525839

2 4 1 28.07500 4.4838599

3 6 0 19.12500 1.6317169

4 6 1 20.56667 0.7505553

5 8 0 15.05000 2.7743959

6 8 1 15.40000 0.5656854

# Note that just grouping a data frame without summary doesn't appear to do

# much from a visual point of view.

dp_mtcars %>% group_by(cyl)

Source: local data frame [32 x 11]

Groups: cyl [3]

mpg cyl disp hp drat wt qsec vs am gear carb

(dbl) (dbl) (dbl) (dbl) (dbl) (dbl) (dbl) (dbl) (dbl) (dbl) (dbl)

1 21.0 6 160.0 110 3.90 2.620 16.46 0 1 4 4

2 21.0 6 160.0 110 3.90 2.875 17.02 0 1 4 4

3 22.8 4 108.0 93 3.85 2.320 18.61 1 1 4 1

4 21.4 6 258.0 110 3.08 3.215 19.44 1 0 3 1

5 18.7 8 360.0 175 3.15 3.440 17.02 0 0 3 2

6 18.1 6 225.0 105 2.76 3.460 20.22 1 0 3 1

7 14.3 8 360.0 245 3.21 3.570 15.84 0 0 3 4

8 24.4 4 146.7 62 3.69 3.190 20.00 1 0 4 2

9 22.8 4 140.8 95 3.92 3.150 22.90 1 0 4 2

10 19.2 6 167.6 123 3.92 3.440 18.30 1 0 4 4

.. ... ... ... ... ... ... ... ... ... ... ...

Merging Data Frames

One of the strengths of dplyr is it’s ability to do merges via various “joins” like those associated with database joins. There is already a built-in R command called merge that can handle merging duties but dplyr offers flexible and extended capabilities in this regard. Moreover it does so in a way that is consistent (for the most part) with SQL which you can use for a wife variety of data mining tasks. If you already know SQL then you will understand these commands without much effort. Let’s set up two example simple data frames to explain the concept of joining.

df1 <- data.frame(id=c(1,2,3),m1=c(0.98,0.45,0.22)) df2 <- data.frame(id=c(3,4),m1=c(0.17,0.66)) df1 id m1 1 1 0.98 2 2 0.45 3 3 0.22 df2 id m1 1 3 0.17 2 4 0.66

Left Join

Think about what it means to merge these data frames. It makes sense to want to join the data frames with respect to some common column name. In this case it is clear that the id column is in both data frames. So let’s join the data frames using “id” as a “key”. The question is what to do about the fact that there is no id in df2 corresponding to id number 2. This is why different types of joins exist. Let’s see how they work. We’ll start with the left join:

left_join(df1,df2,by="id") id m1.x m1.y 1 1 0.98 NA 2 2 0.45 NA 3 3 0.22 0.17

So the left join looks at the first data frame df1 and then attempts to find corresponding “id” values in df2 that match all id values in df1. Of course there are no ids matching 2 or 3 in df2 so what happens ? The left join will insert NAs in the m1.y column since there are no values in df2. Note that there is in fact an id of value 3 in both data frames so it fills in both measurement columns with the values. Also note that since in both data frames there is a column named “m1” so it has to create unique names to accommodate both columns. The “x” and “y” come from the fact that df1 comes before df2 in the calling sequence to left_join. Thus “x” matches df1 and “y” matches df2.

Inner Join

Let’s join the two data frames in a way that yields only the intersection of the two data structures based on “id”. Using visual examination we can see that there is only one id in common to both data frames – id 3.

inner_join(df1,df2,by="id") id m1.x m1.y 1 3 0.22 0.17

More Involved Join Examples

Now we’ll look at a more advanced example. Let’s create two data frames where the first, (we’ll call it “authors”), presents a list of, well, authors. The second data frame presents a list of books published by various authors. Each data frame has some additional attributes of interest.

# For reference sake - these data frames come from the examples contained in

# the help pages for the built-in R merge command

authors <- data.frame(

surname = I(c("Tukey", "Venables", "Tierney", "Ripley", "McNeil")),

nationality = c("US", "Australia", "US", "UK", "Australia"),

deceased = c("yes", rep("no", 4)))

books <- data.frame(

name = I(c("Tukey", "Venables", "Tierney",

"Ripley", "Ripley", "McNeil", "R Core")),

title = c("Exploratory Data Analysis",

"Modern Applied Statistics ...",

"LISP-STAT",

"Spatial Statistics", "Stochastic Simulation",

"Interactive Data Analysis",

"An Introduction to R"),

other.author = c(NA, "Ripley", NA, NA, NA, NA,

"Venables & Smith"))

authors

surname nationality deceased

1 Tukey US yes

2 Venables Australia no

3 Tierney US no

4 Ripley UK no

5 McNeil Australia no

books

name title other.author

1 Tukey Exploratory Data Analysis <NA>

2 Venables Modern Applied Statistics ... Ripley

3 Tierney LISP-STAT <NA>

4 Ripley Spatial Statistics <NA>

5 Ripley Stochastic Simulation <NA>

6 McNeil Interactive Data Analysis <NA>

7 R Core An Introduction to R Venables & Smith

At first glance it appears that there is nothing in common between these two data frames in terms of column names. However, it is fairly obvious that the “surname” column in the authors data frame matches the “name” column in books so we could probably use those as keys to join the two data frames. We also see that there is an author ,”R Core” (meaning the R Core Team), who appears in the books table though is not listed as an author in the authors data frame. This kind of thing happens all the time in real life so better get used to it. Let’s do some reporting using these two data frames:

Let’s find all authors listed in the authors table who published a book along with their book titles, other authors, nationality, and living status. Let’s try an inner join on this. Because we don’t have any common column names between books and authors we have to tell the join what columns to use for matching. The by argument exists for this purpose. Note also that the author “R Core” listed in books isn’t printed here because that author does not also exist in the authors table. This is because the inner join looks for the intersection of the tables.

inner_join(books,authors,by=c("name"="surname"))

name title other.author nationality deceased

1 Tukey Exploratory Data Analysis <NA> US yes

2 Venables Modern Applied Statistics ... Ripley Australia no

3 Tierney LISP-STAT <NA> US no

4 Ripley Spatial Statistics <NA> UK no

5 Ripley Stochastic Simulation <NA> UK no

6 McNeil Interactive Data Analysis <NA> Australia no

# We could have also done a right join since this will require a result that has

# all rows form the "right" data frame (in the "y" position) which in this case is

# authors

right_join(books,authors,by=c("name"="surname"))

name title other.author nationality deceased

1 Tukey Exploratory Data Analysis <NA> US yes

2 Venables Modern Applied Statistics ... Ripley Australia no

3 Tierney LISP-STAT <NA> US no

4 Ripley Spatial Statistics <NA> UK no

5 Ripley Stochastic Simulation <NA> UK no

6 McNeil Interactive Data Analysis <NA> Australia no

Next, find any and all authors who published a book even if they do not appear in the authors table. The result should show names, titles, other authors, nationality, and living status. Let’s do a left join which will pull in all rows from “x” (books) and where there is no matching key/name in authors then NAs will be inserted for columns existing in the “y” (authors) table.

left_join(books,authors,by=c("name"="surname"))

name title other.author nationality deceased

1 Tukey Exploratory Data Analysis <NA> US yes

2 Venables Modern Applied Statistics ... Ripley Australia no

3 Tierney LISP-STAT <NA> US no

4 Ripley Spatial Statistics <NA> UK no

5 Ripley Stochastic Simulation <NA> UK no

6 McNeil Interactive Data Analysis <NA> Australia no

7 R Core An Introduction to R Venables & Smith <NA> <NA>

Do the same as above but the result should show only the book title and name columns

in that order. This is simply a matter of doing the previous join and piping the result to a filter statement

left_join(books,authors,by=c("name"="surname")) %>% select(title,name)

title name

1 Exploratory Data Analysis Tukey

2 Modern Applied Statistics ... Venables

3 LISP-STAT Tierney

4 Spatial Statistics Ripley

5 Stochastic Simulation Ripley

6 Interactive Data Analysis McNeil

7 An Introduction to R R Core

Find the book names of all US authors and who are not deceased. Well first we filter the authors table to filter out rows according the specified conditions. Then we can pass the result to an inner_join() statement to get the book titles and then we pass that result to select only the book titles. Note that because we are piping the output from the filter() results we don’t need to specify that in the call to inner_join(). That is, the inner_join function assumes that the filter() results represent the “x” position in the call to inner_join()

authors %>% filter(deceased == "no" & nationality == "US") %>%

inner_join(books,by=c("surname"="name")) %>% select(title)surname

title

1 LISP-STAT

Find any book titles for authors who do not appear in the authors data frame. Here we use an anti_join() which returns all rows from books where there are no matching values in authors, keeping just columns from books – and then we pass that result to select for title and name

anti_join(books,authors,by=c("name"="surname")) %>% select(title,name)

title name

1 An Introduction to R R Core

Up Next – Biking in San Francisco

That’s it for now and we have covered a lot of ground in one go although once you invest some time in playing with dplyr (especially the pipes) then it becomes difficult to go back to the “old ways” of doing things. Next up we will look at some “real” data and apply our new found knowledge to working with it. The data set actually comes from the Kaggle Project page for the San Francisco Bay Area Bike Share Service. The data is about 660MB and you can download it though you will need a Kaggle account. You might want to go ahead and download that data in anticipation of the next posting.

Filed under: Data Mining Tagged: database joins, dplyr

R-bloggers.com offers daily e-mail updates about R news and tutorials about learning R and many other topics. Click here if you're looking to post or find an R/data-science job.

Want to share your content on R-bloggers? click here if you have a blog, or here if you don't.