Data Cleaning Part 1 – NYC Taxi Trip Data, Looking For Stories Behind Errors

Want to share your content on R-bloggers? click here if you have a blog, or here if you don't.

Summary

- Data cleaning is a cumbersome but important task for Data Science project in reality.

- This is a discussion on my practice of data cleaning for NYC Taxi Trip data.

- There are lots of domain knowledge, common sense and business thinking involved.

Data Cleaning, the unavoidable, time consuming, cumbersome nontrivial task

Data Science may sound fancy, but I saw many posts/blogs of data scientists complaining that much of their time were spending on data cleaning. From my own experience on several learning/volunteer projects, this step do require lots of time and much attention to details. However I often felt the abnormal or wrong data are actually more interesting. There must be some explanations behind the error, and that could be some interesting stories. Every time after I filtered some data with errors, I can have better understanding of the whole picture and estimate of the information content of the data set.

NYC Taxi Trip Data

One good example is the the NYC Taxi Trip Data.

By the way, this analysis and exploration is pretty impressive. I think it’s partly because the author is NYC native and already have lots of possible pattern ideas in mind. For same reason I like to explore my local area of any national data to gain more understandings from the data. Besides, it turned out that you don’t even need a base map layer for the taxi pickup point map when you have enough data points. The pickup points themselves shaped all the streets and roads!

First I prepared and merged the two data file, trip data and trip fare.

library(data.table)

library(stringr)

library(lubridate)

library(geosphere)

library(ggplot2)

library(ggmap)

## ------------------ read and check data ---------------------------------

trip.data = fread("trip_data_3.csv", sep = ',', header = TRUE, showProgress = TRUE)

trip.fare = fread("trip_fare_3.csv", sep = ',', header = TRUE, showProgress = TRUE)

summary(trip.data)

summary(trip.fare)

## ------------------ column format -----------------------

## all conversion were done on new copy first to make sure it was done right,

## then the original columns were overwrite in place to save memory

# remove leading space in column names from fread

setnames(trip.data, str_trim(colnames(trip.data)))

setnames(trip.fare, str_trim(colnames(trip.fare)))

# convert characters to factor to verify missing values, easier to observe

trip.data[, medallion := as.factor(medallion)]

trip.data[, hack_license := as.factor(hack_license)]

trip.data[, vendor_id := as.factor(vendor_id)]

trip.data[, store_and_fwd_flag := as.factor(store_and_fwd_flag)]

trip.fare[, medallion := as.factor(medallion)]

trip.fare[, hack_license := as.factor(hack_license)]

trip.fare[, vendor_id := as.factor(vendor_id)]

trip.fare[, payment_type := as.factor(payment_type)]

# date time conversion.

trip.data[, pickup_datetime := fast_strptime(pickup_datetime,"%Y-%m-%d %H:%M:%S")]

trip.data[, dropoff_datetime := fast_strptime(dropoff_datetime,"%Y-%m-%d %H:%M:%S")]

trip.fare[, pickup_datetime := fast_strptime(pickup_datetime,"%Y-%m-%d %H:%M:%S")]

## ------------- join two data set by pickup_datetime, medallion, hack_license -------------

# after join by 3 columns, all vendor_id also matches:

# trip.all[vendor_id.x == vendor_id.y, .N] so add vendor_id to key too.

setkey(trip.data, pickup_datetime, medallion, hack_license, vendor_id)

setkey(trip.fare, pickup_datetime, medallion, hack_license, vendor_id)

# we can add transaction number to trip and fare so we can identify missed match more easily

trip.data[, trip_no := .I]

trip.fare[, fare_no := .I]

trip.all = merge(trip.data, trip.fare, all = TRUE, suffixes = c(".x", ".y"))

|

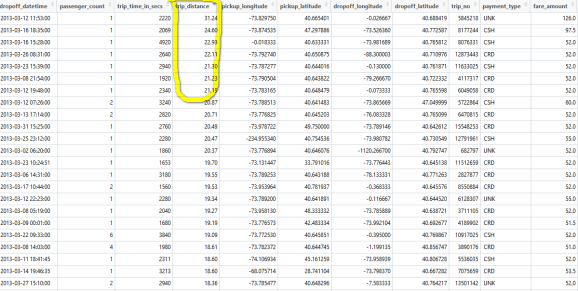

Then I found many obvious data errors.

Some columns have obvious wrong values, like zero passenger count.

Though the other columns look perfectly normal. As long as you are not using passenger count information, I think these rows are still valid.

Another interesting phenomenon is the super short trip:

short = trip.all[trip_time_in_secs <10][order(total_amount)] View(short) |

One possible explanation I can imagine is that maybe some passengers get on taxi then get off immediately, so the time and distance is near zero and they paid the minimal fare of $2.5. Many rows do have zero for pickup or drop off location or almost same location for pick up and drop off.

Then how is the longer trip distance possible? Especially when most pick up and drop off coordinates are either zero or same location. Even if the taxi was stuck in traffic so there is no location change and trip distance recorded by the taximeter, the less than 10 seconds trip time still cannot be explained.

- There are also quite some big value trip fares for very short trips. Most of them have pick up and drop off coordinates at zero or at same locations.

I don’t have good explanations for these phenomenon and I don’t want to make too many assumptions since I’m not really familiar with NYC taxi trips. I guess a NYC local probably can give some insights on them, and we can verify them with data.

Average driving speed

We can further verify the trip time/distance combination by checking the average driving speed. The near zero time or distance could cause too much variance in calculated driving speed. Considering the possible input error in time and distance, we can round up the time in seconds to minutes before calculating driving speed.

First check on the records that have very short time and nontrivial trip distance:

distance.conflict = trip.all[trip_time_in_secs < 10 & trip_distance > 0.5][order(trip_distance)] |

If the pick up and drop off coordinates are not empty, we can calculate the great-circle distance between the coordinates. The actual trip distance must be equal or bigger than this distance.

distance.conflict.with.gps = distance.conflict[pickup_longitude != 0 & pickup_latitude != 0 & dropoff_longitude != 0 & dropoff_latitude != 0] gps.mat = as.matrix(distance.conflict.with.gps[, .(pickup_longitude, pickup_latitude, dropoff_longitude, dropoff_latitude)]) distance.conflict.with.gps[, dis.by.gps.meter := distHaversine(gps.mat[, 1:2],gps.mat[, 3:4])][order(dis.by.gps.meter)] distance.conflict.with.gps[, dis.by.gps.mile := dis.by.gps.meter * 0.000621371] |

If both the great-circle distance and trip distance are nontrivial, it’s more likely the less than 10 seconds trip time are wrong.

wrong.time = distance.conflict.with.gps[dis.by.gps.mile >= 0.5] View(wrong.time[, .(trip_time_in_secs, trip_distance, fare_amount, dis.by.gps.mile)]) |

And there must be something wrong if the great-circle distance is much bigger than the trip distance. Note the data here is limited to the short trip time subset, but this type of error can happen in all records.

Either the taximeter had some errors in reporting trip distance, or the gps coordinates were wrong. Because all the trip time very short, I think it’s more likely to be the problem with gps coordinates. And the time and distance measurement should be much simpler and reliable than the gps coordinates measurement.

gps coordinates distribution

We can further check the accuracy of the gps coordinates by matching with NYC boundary. The code below is a simplified method which take center of NYC area then add 100 miles in four directions as the boundary. More sophisticated way is to use a shapefile, but it will be much slower in checking data points. Since the taxi trip actually can have at least one end outside of NYC area, I don’t think we need to be too strict on NYC area boundary.

trip.valid.gps = trip.all[pickup_longitude != 0 & pickup_latitude != 0 &

dropoff_longitude != 0 & dropoff_latitude != 0]

nyc.lat = 40.719681 # picked "center of NYC area" from google map

nyc.lon = -74.00536

nyc.lat.max = nyc.lat + 100/69

nyc.lat.min = nyc.lat - 100/69

nyc.lon.max = nyc.lon + 100/52

nyc.lon.min = nyc.lon - 100/52

trip.valid.gps.nyc = trip.valid.gps[nyc.lon.max > pickup_longitude & pickup_longitude > nyc.lon.min &

nyc.lon.max > dropoff_longitude & dropoff_longitude > nyc.lon.min &

nyc.lat.max > pickup_latitude & pickup_latitude > nyc.lat.min &

nyc.lat.max > dropoff_latitude & dropoff_latitude > nyc.lat.min]

View(trip.valid.gps[!trip.valid.gps.nyc][order(trip_distance)])

mat.nyc = as.matrix(trip.valid.gps.nyc[, .(pickup_longitude, pickup_latitude, dropoff_longitude, dropoff_latitude)])

dis = distHaversine(mat.nyc[, 1:2],mat.nyc[, 3:4]) / 1639.344

trip.valid.gps.nyc[, dis.by.gps := dis]

|

I found another verification on gps coordinates when I was checking the trips started from the JFK airport. Note I used two reference points in JFK airport to better filter all the trips that originated from inside the airport and the immediate neighborhood of JFK exit.

# the official loc of JFK is too far on east, we choose 2 point to better represent possible pickup areas. jfk.inside = data.frame(lon = -73.783074, lat = 40.64561) jfk.exit = data.frame(lon = -73.798523, lat = 40.658439) jfk.map = get_map(location = unlist(jfk.inside), zoom = 13, maptype = 'roadmap') # rides from JFK could end at out of NYC, but there are too many obvious wrong gps information in that part of data, we will just use the data that have gps location in NYC area this time. This area is actually rather big, a square area with 200 miles edge. trip.valid.gps.nyc[, dis.jfk.center.meter := distHaversine(mat.nyc[, 1:2], jfk.inside)] trip.valid.gps.nyc[, dis.jfk.exit.meter := distHaversine(mat.nyc[, 1:2], jfk.exit)] # the actual distance threshold is adjusted by visual checking the map below, so that it includes most rides picked up from JFK, and excludes rides in neighborhood but not from JFK. near.jfk = trip.valid.gps.nyc[dis.jfk.center.meter < 2500 | dis.jfk.exit.meter < 1200] ggmap(jfk.map) +geom_point(data = rbind(jfk.inside, jfk.exit), aes(x = lon, y = lat)) + geom_point(data = near.jfk, aes(x = pickup_longitude, y = pickup_latitude, colour = 'red')) |

Interestingly, there are some pick up points in the airplane runway or the bay. These are obvious errors, actually I think gps coorindates report in big city could have all kinds of error.

Superman taxi driver

I also found some interesting records in checking taxi driver revenue.

trip.march = trip.all[month(dropoff_datetime) == 3] revenue = trip.march[, .(revenue.march = sum(total_amount)), by = hack_license] summary(revenue$revenue.march) |

Min. 1st Qu. Median Mean 3rd Qu. Max. 2.6 4955.0 7220.0 6871.0 9032.0 43770.0 |

Who are these superman taxi driver that earned significantly more?

tail(revenue[order(revenue.march)]) |

hack_license revenue.march 1: 3AAB94CA53FE93A64811F65690654649 21437.62 2: 74CC809D28AE726DDB32249C044DA4F8 22113.14 3: F153D0336BF48F93EC3913548164DDBD 22744.56 4: D85749E8852FCC66A990E40605607B2F 23171.50 5: 847349F8845A667D9AC7CDEDD1C873CB 23366.48 6: CFCD208495D565EF66E7DFF9F98764DA 43771.85 |

View(trip.all[hack_license == 'CFCD208495D565EF66E7DFF9F98764DA']) |

So this driver were using different medallion with same hack license, picked up 1412 rides in March, some rides even started before last end(No.17, 18, 22 etc). The simplest explanation is that these records are not from one single driver.

rides = trip.march[, .N, by = hack_license] summary(rides) tail(rides[order(N)]) |

hack_license N 1: 74CC809D28AE726DDB32249C044DA4F8 1514 2: 51C1BE97280A80EBFA8DAD34E1956CF6 1530 3: 5C19018ED8557E5400F191D531411D89 1575 4: 847349F8845A667D9AC7CDEDD1C873CB 1602 5: F49FD0D84449AE7F72F3BC492CD6C754 1638 6: D85749E8852FCC66A990E40605607B2F 1649 |

These hack license owner picked up more than 1500 rides in March, that’s 50 per day.

We can further check if there is any time overlap between drop off and next pickup, or if the pick up location was too far from last drop off location, but I think there is no need to do that before I have better theory.

Summary

In this case I didn’t dig too much yet because I’m not really familiar with NYC taxi, but there are lots of interesting phenomenons already. We can know a lot about the quality of certain data fields from these errors.

In my other project, data cleaning is not just about digging interesting stories. It actually helped with the data process a lot. See more details in my next post.

Version History

- 2016-01-31 : First version.

- 2016-05-11 : Added Summary.

R-bloggers.com offers daily e-mail updates about R news and tutorials about learning R and many other topics. Click here if you're looking to post or find an R/data-science job.

Want to share your content on R-bloggers? click here if you have a blog, or here if you don't.